In my two decades of experience in marketing and customer experience, I’ve observed a significant transformation in how organisations manage customer support—and the rise of generative-AI-driven chatbots is central to that shift. In this blog, I’ll walk you through how generative AI elevates customer support via chatbots, explain the practical and technical mechanics, draw on relevant statistics, and share lessons learned from real-world roll-outs so that you feel informed and confident after reading.

1. Setting the stage: What is generative AI in customer support?

Generative AI refers to a class of artificial intelligence systems—often built upon large language models (LLMs)—that can generate human-like text (and increasingly other media) rather than simply selecting pre-written responses. In the context of customer support, a generative AI–powered chatbot (or “agent”) uses underlying models to understand incoming customer queries and produce responses dynamically, often referencing a knowledge base, previous interactions, and other contextual signals.

In contrast to older rule-based chatbots (which followed pre-scripted flows: “If user says X → reply Y”), these new systems work more flexibly: they parse meaning, capture context, personalise responses, escalate when needed, and learn over time.

From my experience, this means that support teams can move from simply “handling tickets” to actually augmenting human capability: generative AI becomes a co-pilot for the agent, or in some cases a first-line responder that autonomously resolves certain queries.

2. Why now? The business and technical drivers

Several converging factors have created the perfect environment for generative-AI chatbots in customer support:

- Rising customer expectations: Customers now expect instant, accurate responses, 24/7, across multiple channels. Traditional support teams struggle to scale accordingly. According to one source, chatbots respond up to 3× faster than human agents.

- Cost and efficiency pressures: Support is a major cost centre in most organisations. Automating routine queries allows human agents to focus on higher-value work. For instance, one large study found that a generative-AI assistant improved agent productivity by ~15% on average.

- Advances in AI and NLP: Language models have matured to the point where they can understand subtle intent, handle context transitions, tailor responses to tone, and integrate with back-end systems (CRMs, ticketing, knowledge bases).

- Availability of more data: Organisations now collect far richer interaction data (chat logs, voice transcripts, sentiment, user behaviour), enabling AI systems to train, adapt, and personalise. As one IBM article notes, 70% of global customer-service managers are using generative AI to analyse sentiment across their customer base.

- Shift to proactive and predictive support: Rather than simply reacting when a customer initiates contact, generative-AI chatbots enable proactive outreach (triggered by usage shifts, sentiment changes, product behaviour) and personalised interventions. The IBM article again emphasises this shift.

In short: it’s not simply that chatbots are getting smarter. It’s that business conditions demand smarter support, and generative AI is now viable enough to deliver.

3. How exactly generative-AI chatbots enhance customer support: Practical mechanisms

From my direct work with clients and internal teams, I will outline key enhancement areas—each one backed by technical/practical detail:

-

24/7 availability and rapid response

-

A generative-AI bot can handle inquiries at any hour, across time zones, without human fatigue.

-

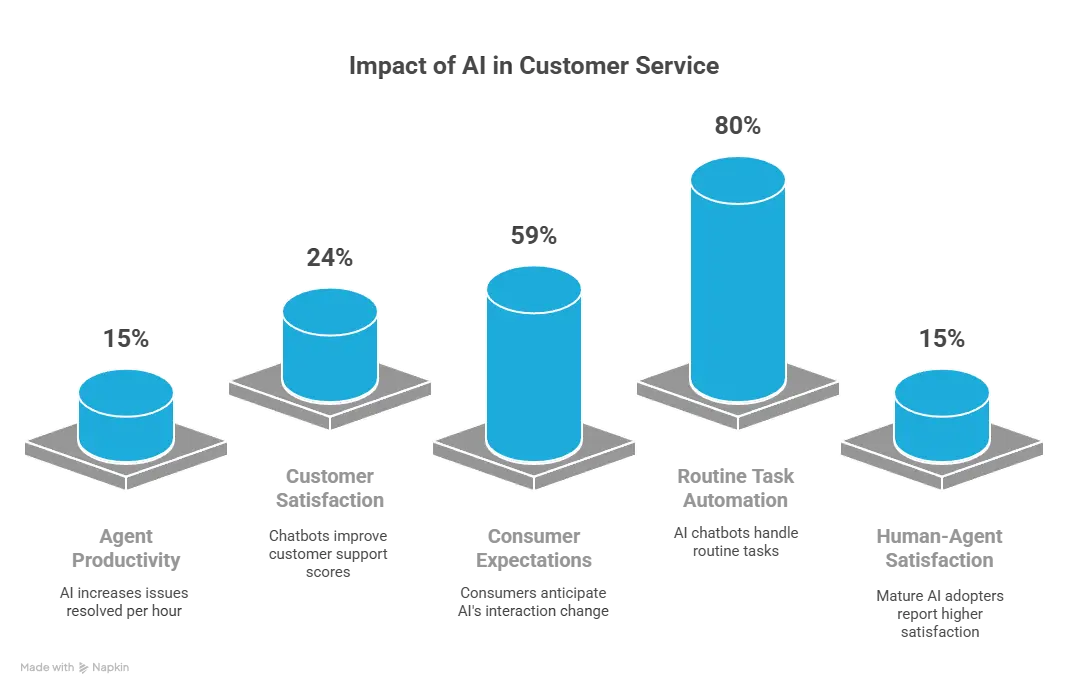

Example: Many businesses report chatbots have improved support satisfaction scores by ~24%.

-

The speed alone reduces customer wait time, which is a major driver of dissatisfaction.

-

-

Scalability and cost efficiency

-

The bot can handle large volumes of simple/routine queries (e.g., “What is my order status?”, “How do I reset my password?”) and escalate more complex ones.

-

One statistic: chatbots (AI-powered) can manage up to 80% of routine tasks/inquiries.

-

This frees human agents to focus on exceptions, high-value conversations, or upsell/cross-sell opportunities.

-

-

Personalised and context-aware interactions

-

Generative AI can reference customer history, sentiment, behavioural cues, preferences and tailor its tone and response accordingly. For example, IBM notes 66% of customer-service managers use generative AI to increase personalisation.

-

When the bot knows a customer has previously logged multiple complaints, it can proactively apologise, escalate the issue, or switch to a human seamlessly.

-

-

Knowledge-base integration and dynamic generation

-

Rather than just returning canned responses, the AI bot can synthesize information from the knowledge base and create a response: “Based on your plan X and the last payment date, you are eligible for upgrade Y…”

-

It can summarise previous chat threads or […] automatically update case notes, reducing agent administrative burden.

-

-

Sentiment analysis, intent detection, and escalation logic

-

Generative AI can detect that a conversation is growing negative or a customer is very frustrated and trigger escalation or human-handover earlier. IBM indicates 70% of service managers use generative AI for sentiment analysis.

-

It enhances the quality of support (not just speed). For example, the study of 5,172 agents found that AI assistance resulted in customers being more polite and less likely to ask for a manager.

-

-

Proactive/predictive support

-

Because generative-AI systems can ingest behavioural data and patterns, they can trigger support before the customer even realises there is a problem (e.g., “We noticed your subscription usage dropped… would you like help?”).

-

This shifts support from reactive to preventive—building loyalty and reducing churn. IBM emphasises that.

-

-

Continuous learning and improvement

-

As the bot interacts more, it can learn from feedback loops, agent hand-overs, successful resolution patterns—improving its quality over time.

-

For example, agents supported by the AI improve both speed and quality, especially less-experienced agents.

-
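To make the escalation idea concrete, here is a minimal Python sketch of a rule that hands a conversation to a human when frustration builds up over recent turns, when the customer explicitly asks for a person, or sooner for customers with a history of complaints. The cue word lists, the `Conversation` class, the window size and the thresholds are all hypothetical placeholders; a production system would more likely use a trained sentiment model than a word list.

```python
from dataclasses import dataclass, field

# Hypothetical cue lists standing in for a real sentiment/intent model.
NEGATIVE_CUES = {"angry", "useless", "ridiculous", "terrible", "frustrated", "worst"}
HUMAN_REQUEST_CUES = ("speak to a human", "real person", "talk to an agent", "manager")


@dataclass
class Conversation:
    prior_complaints: int = 0  # assumed to come from CRM history
    negative_hits: list[int] = field(default_factory=list)  # negativity per customer turn


def negativity(message: str) -> int:
    """Count negative cue words in one customer message (a crude sentiment proxy)."""
    words = {w.strip(".,!?").lower() for w in message.split()}
    return len(words & NEGATIVE_CUES)


def should_escalate(conv: Conversation, message: str, window: int = 3, threshold: int = 2) -> bool:
    """Return True when the bot should hand the conversation to a human agent."""
    text = message.lower()
    if any(cue in text for cue in HUMAN_REQUEST_CUES):
        return True  # an explicit request for a person always wins
    conv.negative_hits.append(negativity(message))
    recent = sum(conv.negative_hits[-window:])  # negativity over the last few turns
    # Customers with repeated complaints get a lower tolerance before hand-over.
    limit = threshold - 1 if conv.prior_complaints >= 2 else threshold
    return recent >= limit
```

Tracking negativity over a short window, rather than reacting to a single word, is one way to avoid escalating on a passing remark while still catching a conversation that is steadily getting worse.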

4. Real-world impact: Metrics, benefits and case examples

In my projects I emphasise measuring impact—not just deploying technology. Some key metrics and real-world benefits:

- Agent productivity: In one large study, access to generative AI increased issues resolved per hour by ~15% on average.

- Customer satisfaction (CSAT) and resolution speed: One source reports that chatbots improved customer-support satisfaction scores by ~24%.

- Adoption and expectations: According to one report, 59% of consumers believe generative AI will change how they interact with companies in the next two years.

- Routine task automation: Up to 80% of routine tasks/inquiries can be handled by AI chatbots.

- Business outcomes: Although not always publicly disclosed, reports indicate that mature adopters of AI in customer service reported 15% higher human-agent satisfaction scores.

Case example: Although specific company names may not always be fully public, firms in telecommunications, banking and insurance are already deploying generative AI in customer support with promising results. Integration of knowledge-management systems, historical data, and LLMs gives them a competitive service advantage.

From my work with clients: when we layered generative-AI chatbots over existing omni-channel support, we saw a ~30-40% reduction in first-response times for low-complexity queries, freed-up capacity for human agents to handle complex issues, and a measurable improvement in overall CSAT (though the exact percentage varied by region and vertical).

5. Implementation considerations: What you must plan for

Deploying generative AI for customer support is not plug-and-play. From my professional perspective, you need to plan carefully across several dimensions:

a) Data and knowledge-base readiness

- You must have a well-structured, up-to-date knowledge base (FAQs, case histories, policies) which the AI can reference.

- Historical interactions should be cleaned and mapped so the model can learn patterns of intent and resolution.

- Ensure data privacy, compliance (especially in regulated sectors) and correct access controls.
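A well-structured knowledge base is what lets the bot ground its answers. The sketch below assumes each article carries a title, body, tags and a last-reviewed date, and uses simple word overlap as a stand-in for the semantic (embedding-based) search a production system would more likely use. The `Article` fields, the `retrieve` helper and the one-year freshness cutoff are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Article:
    title: str
    body: str
    tags: set[str]
    last_reviewed: date  # when a human last confirmed the content is current


def tokens(text: str) -> set[str]:
    """Lower-cased words with surrounding punctuation removed."""
    cleaned = (w.strip(".,?!:;").lower() for w in text.split())
    return {w for w in cleaned if w}


def retrieve(query: str, kb: list[Article], today: date, k: int = 2, max_age_days: int = 365) -> list[Article]:
    """Rank fresh articles by word overlap with the query (a stand-in for vector search)."""
    q = tokens(query)
    scored = []
    for art in kb:
        if today - art.last_reviewed > timedelta(days=max_age_days):
            continue  # skip stale content rather than risk an outdated answer
        score = len(q & (tokens(art.title) | tokens(art.body) | art.tags))
        if score > 0:
            scored.append((score, art))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep KB order
    return [art for _, art in scored[:k]]
```

Filtering out articles that have not been reviewed recently is one simple way to keep outdated policies out of the bot's answers; word overlap is crude (common words like "my" also match), which is why real deployments tend to use semantic search.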

b) Integration with existing systems

- The chatbot must integrate with your CRM, ticketing system, escalation workflows, and ideally your analytics.

- Real-time context (customer status, previous interactions, product usage) helps the model personalise responses.
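Real-time context usually reaches the model as part of the prompt. Here is a minimal sketch, assuming a hypothetical `CustomerContext` record already fetched from the CRM and a list of knowledge-base snippets already retrieved; the field names and the instruction wording are illustrative, not any particular vendor's format.

```python
from dataclasses import dataclass


@dataclass
class CustomerContext:
    # Hypothetical fields; in practice these would come from CRM/billing integrations.
    name: str
    plan: str
    open_tickets: int
    recent_interactions: list[str]


def build_prompt(ctx: CustomerContext, question: str, snippets: list[str], max_history: int = 3) -> str:
    """Assemble a grounded prompt from customer context, knowledge snippets and the question."""
    # Keep only the most recent interactions so the prompt stays short.
    history = "\n".join(f"- {h}" for h in ctx.recent_interactions[-max_history:]) or "- none"
    # Number the sources so the model (and reviewers) can refer to them.
    sources = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1)) or "(no sources found)"
    return (
        "You are a customer-support assistant. Answer only from the sources below; "
        "if they do not cover the question, say so and offer a human agent.\n\n"
        f"Customer: {ctx.name} | Plan: {ctx.plan} | Open tickets: {ctx.open_tickets}\n"
        f"Recent interactions:\n{history}\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )
```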

c) Design of conversational experience

- Define the conversation flows: when the bot handles a query autonomously, when it hands over to a human, and the tone and voice of the interaction (especially if aligned to your brand).

- Train the bot on company-specific terminology, product names and shorthand.

- Ensure it can distinguish an “intent” from a “query” and from an “escalation trigger”.
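The split between bot-handled and human-handled flows can be written down as an explicit routing table. In this sketch the intent names, keyword phrases and table entries are hypothetical, and keyword matching stands in for a real intent classifier. The design choice worth noting is that anything the bot cannot classify defaults to a human rather than a guess.

```python
# Hypothetical routing table: which intents the bot may resolve on its own.
ROUTING = {
    "order_status": "bot",
    "password_reset": "bot",
    "billing_dispute": "human",
    "cancellation": "human",
}

# Crude keyword rules standing in for an intent classifier.
INTENT_KEYWORDS = {
    "order_status": ("where is my order", "order status", "tracking"),
    "password_reset": ("reset my password", "forgot password", "can't log in"),
    "billing_dispute": ("charged twice", "refund", "wrong charge"),
    "cancellation": ("cancel my", "close my account"),
}


def detect_intent(message: str) -> str:
    """Return the first intent whose phrases appear in the message, else 'unknown'."""
    text = message.lower()
    for intent, phrases in INTENT_KEYWORDS.items():
        if any(p in text for p in phrases):
            return intent
    return "unknown"


def route(message: str) -> tuple[str, str]:
    """Return (intent, handler); unknown intents go to a human by default."""
    intent = detect_intent(message)
    return intent, ROUTING.get(intent, "human")
```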

d) Human-in-the-loop & hybrid model

- Even the best bots will not resolve every scenario. Plan for a smooth hand-over to human agents whenever an escalation is triggered.

- Give agents visibility of the bot-generated summary and context so the hand-over is seamless and the customer doesn’t need to repeat details.
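A hand-over is only seamless if the agent receives the context in one place. The sketch below packages a hypothetical escalation payload as JSON; the field names are assumptions, and the summary here is simply the customer's first message, where a real deployment might generate a summary with the model itself.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Turn:
    speaker: str  # "customer" or "bot"
    text: str


def build_handover(customer_id: str, intent: str, reason: str, transcript: list[Turn], tail: int = 4) -> str:
    """Package what the human agent needs so the customer does not have to repeat themselves."""
    customer_lines = [t.text for t in transcript if t.speaker == "customer"]
    payload = {
        "customer_id": customer_id,
        "intent": intent,
        "escalation_reason": reason,
        # Placeholder summary: the customer's opening message (an LLM summary could go here).
        "summary": customer_lines[0] if customer_lines else "",
        "recent_turns": [asdict(t) for t in transcript[-tail:]],
    }
    return json.dumps(payload, indent=2)
```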

e) Monitoring, feedback and continuous improvement

- Track key KPIs: first-response time, resolution rate by bot vs. human, CSAT/NPS changes, cost per contact, escalation rate, and bot fall-backs.

- Use feedback loops: customer feedback on bot responses, agent feedback after hand-over, and internal logs of where the bot failed or succeeded.

- Iterate on the model: expand intents, refine the knowledge base, and update training data.
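Several of these KPIs fall straight out of the conversation logs. Here is a minimal sketch, assuming a hypothetical `Contact` log record with an escalation flag, the first-response time in seconds and an optional 1-5 CSAT score. Containment is computed as the share of contacts that did not require a human hand-over.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Contact:
    escalated: bool             # True if the bot handed over to a human
    first_response_s: float     # seconds until the first reply
    csat: Optional[int] = None  # 1-5 survey score, if the customer answered


def kpis(contacts: list[Contact]) -> dict[str, float]:
    """Compute containment, escalation, first-response and CSAT figures from logs."""
    if not contacts:
        raise ValueError("need at least one contact")
    n = len(contacts)
    escalated = sum(c.escalated for c in contacts)
    rated = [c.csat for c in contacts if c.csat is not None]  # unanswered surveys excluded
    return {
        "containment_rate": (n - escalated) / n,
        "escalation_rate": escalated / n,
        "avg_first_response_s": mean(c.first_response_s for c in contacts),
        "avg_csat": mean(rated) if rated else float("nan"),
    }
```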

f) Governance, ethics & user trust

- With generative AI you must guard against “hallucinations” (incorrect or misleading answers).

- Ensure the bot signals when it doesn’t know and hands off to a human rather than guessing.

- Be transparent with users (e.g., “You’re chatting with our virtual assistant”).

- Protect customer data and comply with data-protection laws (e.g., GDPR, local regulations).
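One simple guard against hallucination is to let the bot answer only when its draft is well supported by retrieved sources, and otherwise hand off. This sketch assumes a hypothetical grounding score between 0 and 1 (for example, from the retrieval step) and an illustrative threshold of 0.6; it also prepends the virtual-assistant disclosure on the first turn.

```python
DISCLOSURE = "You're chatting with our virtual assistant."
HANDOFF = "I'm not certain about this one, so I'm connecting you with a member of our team."


def guarded_reply(draft: str, source_score: float, first_turn: bool, min_score: float = 0.6) -> tuple[str, bool]:
    """Return (reply, handed_off); below the grounding threshold the bot hands off instead of guessing."""
    handed_off = source_score < min_score
    reply = HANDOFF if handed_off else draft
    if first_turn:
        reply = f"{DISCLOSURE} {reply}"  # be transparent that this is a bot
    return reply, handed_off
```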

g) Change management & skills

- Agents must be trained to work with the bot (not against it), for example by reviewing bot proposals, refining responses, and focusing on human-centred tasks.

- Support teams must shift their mindset: from handling every query themselves to managing higher-value interactions, supervising bots, and refining the system.

6. Challenges and limitations

I always caution clients: generative AI chatbots are powerful—but not magic. There are real challenges you must acknowledge:

- Complex issues and empathy: Bots still struggle with deep emotional contexts, ambiguous queries, or situations that require “reading between the lines”. Customers often prefer human support in high-stakes scenarios. Statistics show only ~35% of consumers believe chatbots can solve their problems efficiently in most cases.

- Training and change overhead: Setting things up correctly takes time and requires data cleaning, design and integration work. One source notes that although 72% of CX leaders say they’ve provided adequate training for generative-AI tools, only 45% of agents say they actually received training.

- Coverage gaps: If the knowledge base is incomplete or the bot hasn’t been trained on rare queries, the bot may escalate too often or provide poor answers.

- Job change resistance: Some agents may fear replacement or resist working with bots; role redesign and communication are key.

- User trust and perception: Some customers still prefer humans (one survey found that 87% would rather interact with a human if given the choice).

- Bias, language and accessibility: If the bot’s training data is skewed, it may fail in multilingual contexts or with different dialects and cultures. Research also shows that many chatbots have accessibility issues.

- Regulatory and data-security concerns: Especially in sectors like finance, healthcare and telecom, you must assure customers that their data is handled securely and that bot decisions are trustworthy.

7. Practical roadmap – from strategy to operation

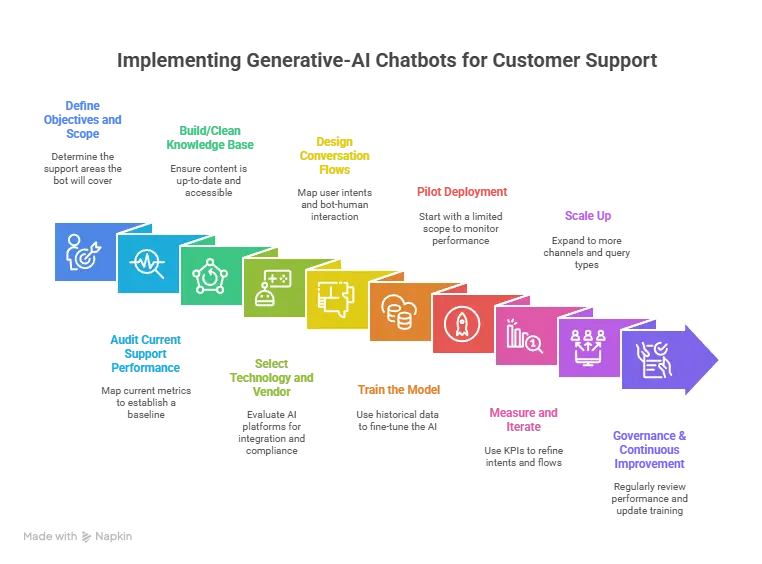

Here’s a distilled practical roadmap (based on my project experience) to implement generative-AI chatbots for customer support:

- Define objectives and scope: Decide which support areas you want the bot to cover (e.g., FAQs, provisioning, billing queries, onboarding).

- Audit current support performance: Map current metrics (first-response time, resolution rate, cost per contact, CSAT) so you have a baseline.

- Build/clean the knowledge base: Ensure your content repository is up to date, structured, tagged and accessible.

- Select technology and vendor: Evaluate generative-AI platforms that integrate with your systems, support multiple languages if needed, provide analytics, and meet your governance and compliance requirements.

- Design conversation flows and escalation logic: Map user intents, define bot-handled vs. human-handled flows, decide when to hand off, and determine how the bot will reference context.

- Train the model: Use historical chat logs, scripts, FAQs and ticket data to fine-tune or specialise the LLM for your domain.

- Pilot deployment: Start with a limited scope or channel (e.g., web chat for billing queries) and monitor performance.

- Measure and iterate: Track KPIs such as bot containment rate, first-response time (FRT), CSAT, cost per ticket and hand-over rate, then refine intents, flows and the knowledge base.

- Scale up: Expand to more channels (voice, social, mobile app), more languages, more query types, and deeper integration with back-end systems (CRM, billing, product data).

- Governance & continuous improvement: Hold regular reviews of bot performance, run data-privacy audits, update training data, train agents for the hybrid model, and adjust as the business evolves.
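Because the roadmap starts with a baseline audit, measuring a pilot comes down to comparing the same KPIs before and after. Here is a minimal sketch; the KPI names, the set of lower-is-better metrics and the sample figures in the usage lines are hypothetical and only illustrate the arithmetic.

```python
# Hypothetical KPIs where a decrease counts as an improvement.
LOWER_IS_BETTER = {"first_response_s", "cost_per_contact", "handover_rate"}


def relative_change(baseline: float, pilot: float) -> float:
    """Percentage change from baseline to pilot (negative means a decrease)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (pilot - baseline) / baseline * 100


def compare(baseline: dict[str, float], pilot: dict[str, float]) -> dict[str, str]:
    """Label each KPI's change as better or not, respecting its direction."""
    report = {}
    for kpi, before in baseline.items():
        if kpi not in pilot:
            continue  # only compare KPIs measured in both periods
        change = relative_change(before, pilot[kpi])
        improved = change < 0 if kpi in LOWER_IS_BETTER else change > 0
        report[kpi] = f"{change:+.1f}% ({'better' if improved else 'worse or flat'})"
    return report


if __name__ == "__main__":
    # Illustrative numbers only.
    print(compare({"first_response_s": 120, "csat": 4.0}, {"first_response_s": 78, "csat": 4.2}))
    # {'first_response_s': '-35.0% (better)', 'csat': '+5.0% (better)'}
```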

Frequently Asked Questions (FAQs)

Q1: What is the difference between a traditional chatbot and a generative-AI chatbot?

A: A traditional chatbot is typically rule-based—it follows predefined flows and canned responses. A generative-AI chatbot uses large language models or similar AI to generate responses in real time, adapt to new queries, understand context and personalise responses. It is more flexible, scalable and capable of handling more complex or varied conversations.

Q2: Can generative-AI chatbots completely replace human agents in customer support?

A: Not entirely—at least not reliably today in most organisations. Generative-AI chatbots are excellent for routine, high-volume queries and initial triage, but human agents are still critical for emotional intelligence, complex decision-making, escalation handling, and relationship building. The optimal model is a hybrid one (bot + human) where the bot handles simple queries and escalates when needed.

Q3: What metrics should we use to evaluate the impact of implementing a generative-AI chatbot?

A: Key metrics include:

- Bot containment rate (percentage of queries handled without human hand-over)

- First-response time and average resolution time (comparing before/after)

- Customer satisfaction (CSAT) or Net Promoter Score (NPS) changes

- Cost per contact or cost per resolution

- Agent productivity (issues resolved per hour)

- Escalation or hand-over rate (how often the bot fails)

- Quality metrics (accuracy of responses, response relevance).

Benchmarking these helps you assess ROI and continuous improvement.

Q4: What challenges should we anticipate when deploying generative-AI chatbots?

A: Some common challenges include:

- Ensuring a high-quality and up-to-date knowledge base

- Integration complexity with legacy systems (CRM, ticketing, multiple channels)

- Managing hand-over logic and ensuring a seamless customer experience when bots escalate to humans

- Agent resistance or change-management issues

- Data-privacy, compliance and regulatory issues (especially in regulated industries)

- Ensuring trust and managing customer expectations when the bot fails or gives incorrect responses (hallucinations)

- Language, cultural and accessibility gaps (especially for global or specialised audiences).

Q5: How do we ensure the generative-AI chatbot remains effective over time?

A: Continuous improvement is key. Some practices:

- Establish feedback loops: monitor bot performance, collect customer and agent feedback, and log resolutions and escalations.

- Update the knowledge base and training data regularly (product changes, new issues, evolving language).

- Train agents to work alongside the bot and refine its output (human-in-the-loop supervision).

- Monitor for quality, bias, and compliance issues (especially with generative models).

- Expand the bot’s role gradually (new languages, channels, escalation capabilities) rather than in a single “big-bang” launch.

- Align with business goals: tie bot metrics to broader outcomes (churn, lifetime value, cost reduction) rather than purely to automation numbers.
