In our work with software teams and offshore development units, we’ve witnessed how artificial-intelligence tools are gradually transforming the way code is written, reviewed and maintained. With the launch of GitHub’s new platform called Agent HQ, we may be at a tipping point. In this article I will walk you through what Agent HQ is, why it matters, how it works in practical developer workflows, the benefits and caveats from a technical/managerial perspective, and what this means for the software-engineering ecosystem going forward.

What is Agent HQ?

Agent HQ is a new initiative by GitHub that creates a “mission control” for code-centric AI agents. Rather than relying on a single assistant, the platform enables multiple AI coding agents (from various vendors) to integrate directly within GitHub’s environment.

In simple terms, instead of turning to one AI tool for code suggestions, you and your team can deploy a suite of agents (from OpenAI Codex to Claude and others), all orchestrated in one place.

From our experience in managing development teams and offshore delivery, this reflects two critical shifts:

- AI in software is no longer just autocomplete; it is becoming an orchestrated set of agents.

- Governance, workflow integration and parallelism (multiple agents working on a task) are now becoming enterprise-level concerns.

Agent HQ addresses both.

Why does it matter? The drivers behind the move

1. The rising complexity of AI coding assistance

In the past few years, developers tried out features like code completion, natural-language “ask the codebase” tools, and single-agent assistants. But as teams matured, the ask-your-LLM-and-hope-for-the-best approach began showing friction around task tracking, accountability, code ownership and compliance. Agent HQ brings a unified dashboard and control plane.

2. Multi-agent era

Agent HQ enables multiple AI agents (from different vendors) to be used in parallel for the same task, giving developers choice and resilience. For example, GitHub mentions that they will integrate agents from OpenAI, Anthropic, Google, xAI and others. This is a recognition that no single model will dominate, so offering a platform-agnostic orchestration layer is strategic.

3. Enterprise governance & metrics

From our vantage as strategists, one of the major blockers to AI adoption in larger organisations is control: access, model drift, code quality, auditability. Agent HQ offers dashboards, metrics, permissions and governance features. Having a command-centre ensures that the AI agents become part of the software delivery chain rather than a side experiment.

4. Workflow integration

The meaningful shift is from “AI as a tool you open when you need it” to “AI is embedded in how you plan, code, review, deploy”. Agent HQ introduces features like Plan Mode (generate step-by-step tasks), deeper integrations in IDEs, and more seamless agent invocation. This reduces context switching and increases productivity.

5. Statistics that matter

GitHub reports that its developer base has reached roughly 180 million, growing at its fastest rate ever. GitHub also states that many developers who are new to the platform adopt Copilot (its earlier AI assistant offering) very quickly, a sign that AI tools are becoming a default expectation.

From an operational perspective this means: if your organisation is still treating AI support as a niche optional plugin, you’re falling behind. Agent HQ signals that AI-driven workflows are now mainstream in coding organisations.

How does Agent HQ work in practice?

Here is how I see this being implemented from the development team's point of view:

-

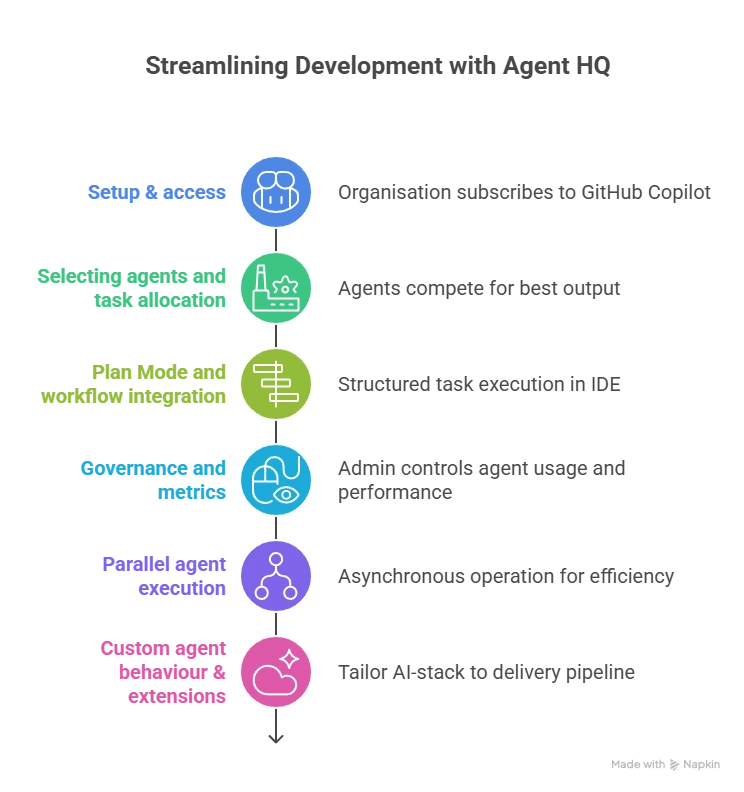

Setup & access

An organisation subscribes to GitHub Copilot (or a higher tier) and gains access to the Agent HQ dashboard. The dashboard becomes the central place to “assign an agent” to a task, monitor progress, and manage permissions and metrics.

Selecting agents and task allocation

Suppose you have three agents available: Codex (OpenAI), Claude (Anthropic), and another from xAI. You can assign the same bug-fix or feature generation task to all three, let them run in parallel, and then choose the best output. This enables agent-competition and fosters improved outputs.

In our offshore delivery context, this means: you keep the human review step but accelerate the candidate generation.

Plan Mode and workflow integration

Before any code is generated, in VS Code or another IDE you can initiate “Plan Mode” where the agent outlines the steps (e.g., design, code draft, test, integrate). Then the agent carries out each sub-task. This brings structure rather than ad-hoc prompts.
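To make the plan-then-execute idea concrete, here is a minimal Python sketch. It does not call Plan Mode or any GitHub API: `Step`, `Plan` and `run_plan` are hypothetical names, and each step's action is a plain function standing in for the work an agent would do.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    """One planned sub-task (hypothetical structure, not a GitHub type)."""
    name: str
    action: Callable[[], str]  # stand-in for the work an agent would do
    done: bool = False
    result: str = ""


@dataclass
class Plan:
    goal: str
    steps: List[Step] = field(default_factory=list)


def run_plan(plan: Plan) -> List[str]:
    """Execute steps in order and stop at the first failure."""
    log = []
    for step in plan.steps:
        try:
            step.result = step.action()
            step.done = True
            log.append(f"ok: {step.name}")
        except Exception as exc:
            # Stop here so a human can review before later steps run.
            log.append(f"failed: {step.name} ({exc})")
            break
    return log


plan = Plan(
    goal="Add input validation to the signup form",
    steps=[
        Step("design", lambda: "validation rules listed"),
        Step("code draft", lambda: "validator drafted"),
        Step("test", lambda: "unit tests written"),
        Step("integrate", lambda: "wired into form handler"),
    ],
)

for line in run_plan(plan):
    print(line)
```

The point of the structure is that the plan exists as reviewable data before anything runs, which is what separates this from an ad-hoc prompt.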

Governance and metrics

On the enterprise side, the admin can control which agents are allowed, track usage by team or repo, and get analytics on agent performance, code review drift and maintainability scores. Agent HQ also supports code-quality metrics beyond just vulnerability scanning.

For example: you might track “% of agent-generated code that required revision” or “average time saved per task”.
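The two example metrics can be computed from a simple task log. The sketch below is our own illustration, not an Agent HQ export format: the `TaskRecord` fields and the sample numbers are assumptions, and the "baseline" time is whatever estimate your team agrees on for doing the task unaided.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskRecord:
    """One agent-assisted task (hypothetical fields for illustration)."""
    task_id: str
    agent: str
    needed_revision: bool     # did a reviewer have to change the output?
    baseline_minutes: float   # team estimate for doing the task unaided
    actual_minutes: float     # time spent with agent assistance


def revision_rate(records: List[TaskRecord]) -> float:
    """Percentage of agent outputs that required human revision."""
    if not records:
        return 0.0
    revised = sum(1 for r in records if r.needed_revision)
    return 100.0 * revised / len(records)


def average_minutes_saved(records: List[TaskRecord]) -> float:
    """Mean of (baseline - actual) minutes across all tasks."""
    if not records:
        return 0.0
    saved = sum(r.baseline_minutes - r.actual_minutes for r in records)
    return saved / len(records)


# Made-up sample data, only to show the calculation.
records = [
    TaskRecord("T-1", "agent-a", False, 60, 25),
    TaskRecord("T-2", "agent-b", True, 90, 70),
    TaskRecord("T-3", "agent-a", False, 30, 10),
    TaskRecord("T-4", "agent-b", True, 45, 45),
]

print(f"Revision rate: {revision_rate(records):.1f}%")                  # 50.0%
print(f"Average time saved: {average_minutes_saved(records):.2f} min")  # 18.75 min
```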

Parallel agent execution

Rather than a linear chain (human → one assistant → human review), you now have: human assigns task → multiple agents operate asynchronously → human reviews best output and merges. This parallelism is a key contrast with earlier models.
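The fan-out pattern itself is easy to sketch in Python with `asyncio`. This is an illustration of the pattern only, not how Agent HQ is implemented: the agents here are stub coroutines, and `make_stub_agent` and `fan_out` are hypothetical names.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple

# Hypothetical agent signature: takes a task description, returns a patch.
Agent = Callable[[str], Awaitable[str]]


def make_stub_agent(name: str, delay: float) -> Agent:
    """Build a fake agent that 'works' for `delay` seconds."""
    async def agent(task: str) -> str:
        await asyncio.sleep(delay)
        return f"[{name}] proposed patch for: {task}"
    return agent


async def fan_out(task: str, agents: Dict[str, Agent]) -> List[Tuple[str, str]]:
    """Run every agent on the same task concurrently.

    Failures are reported as text rather than raised, so one failing
    agent does not discard the other candidates.
    """
    names = list(agents)
    results = await asyncio.gather(
        *(agents[n](task) for n in names), return_exceptions=True
    )
    candidates = []
    for name, result in zip(names, results):
        if isinstance(result, Exception):
            candidates.append((name, f"failed: {result}"))
        else:
            candidates.append((name, result))
    return candidates


agents = {
    "agent-a": make_stub_agent("agent-a", 0.03),
    "agent-b": make_stub_agent("agent-b", 0.01),
    "agent-c": make_stub_agent("agent-c", 0.02),
}

candidates = asyncio.run(fan_out("fix null check in parser", agents))
for name, output in candidates:
    # The human reviewer, not the script, decides which candidate to merge.
    print(name, "->", output)
```

Total wall-clock time is roughly that of the slowest agent rather than the sum of all three, which is where the speed-up in candidate generation comes from.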

Custom agent behaviour & extensions

Teams can define their own “agent behaviour” files (AGENTS.md or config), plug in specialist agents (for UI code, backend code, tests), and integrate with external tools (Slack, Jira, Linear). This means you tailor your AI stack to your delivery pipeline.
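One way to think about specialist agents is as a routing table from parts of the codebase to the agent best suited to them. The Python sketch below shows that idea under our own assumptions: it is not the AGENTS.md format or any Agent HQ configuration, and the patterns and agent names are invented for illustration.

```python
from fnmatch import fnmatch
from typing import List, Tuple

# Ordered (glob pattern, agent name) rules; the first match wins.
# Patterns and agent names are illustrative assumptions.
ROUTING_RULES: List[Tuple[str, str]] = [
    ("tests/*", "test-agent"),
    ("*.tsx", "ui-agent"),
    ("*.css", "ui-agent"),
    ("services/*", "backend-agent"),
]
DEFAULT_AGENT = "general-agent"


def pick_agent(path: str) -> str:
    """Return the specialist agent for a file path, or the default."""
    for pattern, agent in ROUTING_RULES:
        # fnmatch's "*" also matches "/" so "*.tsx" covers nested files.
        if fnmatch(path, pattern):
            return agent
    return DEFAULT_AGENT


for path in [
    "web/src/Button.tsx",
    "services/billing/invoice.py",
    "tests/test_invoice.py",
    "README.md",
]:
    print(f"{path} -> {pick_agent(path)}")
```

Keeping the rules ordered and explicit makes it easy for a reviewer to see why a given file went to a given agent.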

Benefits & Practical Implications (From my 30+ years’ engineering strategy lens)

Increased productivity

By reducing repetitive coding, boilerplate generation and initial draft work, teams can focus more on higher-value tasks (architecture, integration, optimisation). The parallel agent model further speeds output.

Better quality and maintainability

Because Agent HQ integrates code quality tools, metrics and review-gates, the risk of “AI-mess” becomes lower. We’ve seen in large-scale projects that uncontrolled auto-generated code can lead to tech-debt; Agent HQ’s governance reduces that risk.

Developer empowerment

Rather than being replaced by AI, developers are empowered to choose and collaborate with agents, making them more efficient. This aligns with our offshore-team model: we train junior and mid-level developers to use AI as a co-pilot, not a substitute.

Vendor flexibility and future-proofing

Since Agent HQ supports multiple agents, you’re not locked into one vendor. If one model becomes dominant or cheaper, you can switch. Strategically this lowers risk of dependency.

Governance, compliance and audit

In regulated industries (e.g., energy, petro-engineering, financial services) our teams must satisfy audits, traceability, code review logs. Agent HQ provides the dashboards and control plane to maintain that discipline.

Strategic Implications for Organisations & Offshore Delivery

- For product companies: Embedding Agent HQ means that your offshore development team can scale faster. We typically advise aligning AI-agent strategy with the delivery pipeline and ensuring governance from day one (code review steps, metrics, KPIs).

- For offshore vendors: You should train your developers in “agent-driven development” as a core competency. This becomes a differentiator.

- For C-suite & heads of engineering: Treat this as an infrastructure investment: not just a “tool for developers” but a “platform for future code-organisation”. Budget accordingly.

- For risk management: Define policies for how AI agents are used, how outputs are reviewed, and how IP and compliance are managed. Agent HQ supports governance but doesn’t replace policy.
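A written policy is easier to enforce when it can also be checked mechanically, for example as a gate in your own pipeline. The following Python sketch shows one possible shape for such a check. Everything in it is hypothetical (`AgentPolicy`, `Change`, `violations` and the agent names); it is not an Agent HQ setting or API.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class AgentPolicy:
    """Team policy (hypothetical fields, not an Agent HQ setting)."""
    allowed_agents: FrozenSet[str]
    min_human_approvals: int = 1


@dataclass(frozen=True)
class Change:
    """An agent-generated change awaiting merge (illustrative only)."""
    agent: str
    human_approvals: int
    tests_passed: bool


def violations(change: Change, policy: AgentPolicy) -> List[str]:
    """Return the reasons a change may not merge; empty means it may."""
    problems = []
    if change.agent not in policy.allowed_agents:
        problems.append(f"agent '{change.agent}' is not on the allow-list")
    if change.human_approvals < policy.min_human_approvals:
        problems.append("not enough human approvals")
    if not change.tests_passed:
        problems.append("tests did not pass")
    return problems


policy = AgentPolicy(allowed_agents=frozenset({"agent-a", "agent-b"}))

# Compliant change: prints an empty list.
print(violations(Change("agent-a", human_approvals=1, tests_passed=True), policy))
# Unapproved agent with no human review: prints two violations.
print(violations(Change("agent-x", human_approvals=0, tests_passed=True), policy))
```

Returning the full list of reasons, rather than a single yes/no, gives you an audit trail for why a change was blocked.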

Frequently Asked Questions (FAQs)

1. What exactly can Agent HQ do in a coding workflow?

Agent HQ enables developers to assign tasks to multiple AI coding agents in parallel, review their outputs via a central dashboard, pick the best output, and manage agent usage, metrics, permissions and governance in one place.

2. Which AI coding agents are supported?

GitHub has announced support for agents from vendors including OpenAI (Codex), Anthropic (Claude), Google, xAI, Cognition and others — all within the Agent HQ ecosystem.

3. Does using Agent HQ replace human developers?

No — in fact, from our view it enhances developer productivity. The human developer remains central for architecture, review, context, integration and quality assurance. The AI agents assist with drafting, boilerplate, and repetitive tasks, so developers can focus higher up the value chain.

4. What kind of governance or quality controls does Agent HQ offer?

Agent HQ offers a “control plane” for AI agents: you can manage which agents are allowed, track usage metrics, audit code generated, enforce review steps, integrate code-quality tools (e.g., maintainability, reliability) and tie into existing CI/CD pipelines.

5. How should organisations prepare to adopt Agent HQ?

- Train development teams in “agent-first” workflows (planning, prompt design, review)

- Define policies for agent usage, review steps and IP/security

- Integrate your CI/CD pipelines and code-quality tools to accept agent-generated drafts

- Monitor metrics: agent performance, time saved, code revision requirement

- Pilot one project first, gather data, then scale
