As a front-end developer working on AI-driven applications, I've found that understanding Model Context Protocols (MCPs) is becoming essential. These protocols determine how front-end interfaces interact with AI models, enabling efficient, real-time, and context-aware operation. In my experience, adopting the right MCP can significantly improve the performance, user experience, and scalability of AI-powered web applications. In this post, I discuss the top 10 MCPs every front-end developer should be familiar with, covering their practical applications, benefits, and limitations.

Understanding MCP (Model Context Protocol) in AI

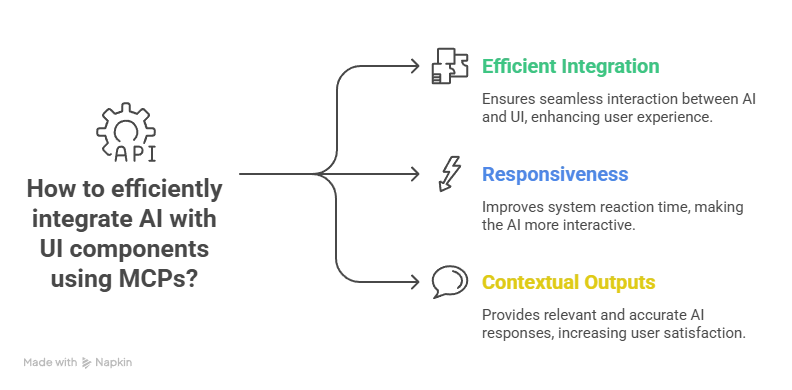

Before diving into the top protocols, let's briefly define MCP. In this post, Model Context Protocols are the frameworks or mechanisms that govern how AI models interact with the application context, manage inputs and outputs, and maintain coherent conversational or data-driven state. (The name is also used for one specific open standard, the Model Context Protocol introduced by Anthropic in 2024 for connecting AI applications to tools and data sources, whose messages are encoded as JSON-RPC 2.0.) For front-end developers, MCPs matter because they dictate how efficiently AI integrates with UI components, how responsive the system is, and how contextual the AI outputs are.

1. RESTful API-Based MCP

Overview: RESTful APIs are the most widely used MCPs for front-end developers. They provide a standardized HTTP interface to interact with AI models.

Practical Use: I frequently use REST APIs to fetch model predictions for applications such as sentiment analysis, recommendation engines, and data visualization dashboards.
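
A minimal sketch of a REST prediction call from the front end, assuming a hypothetical `POST /v1/sentiment` endpoint that returns `{ label, score }`. The `predictSentiment` and `parseSentiment` helpers are illustrative names, and `fetchImpl` is injected so the same code can use the browser's `fetch` or a test stub.

```typescript
// Response shape assumed for the hypothetical sentiment endpoint.
type SentimentLabel = "positive" | "negative" | "neutral";

interface SentimentResult {
  label: SentimentLabel;
  score: number; // model confidence, assumed to be in [0, 1]
}

// The subset of fetch() this helper needs. The browser's global fetch fits it,
// and tests can pass a stub instead of hitting the network.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

const LABELS: readonly string[] = ["positive", "negative", "neutral"];

// Validate untrusted JSON before the UI renders it.
function parseSentiment(data: unknown): SentimentResult {
  if (typeof data !== "object" || data === null) {
    throw new Error("Response body is not a JSON object");
  }
  const { label, score } = data as Record<string, unknown>;
  if (typeof label !== "string" || LABELS.indexOf(label) === -1) {
    throw new Error(`Unexpected label: ${String(label)}`);
  }
  if (typeof score !== "number" || score < 0 || score > 1) {
    throw new Error(`Unexpected score: ${String(score)}`);
  }
  return { label: label as SentimentLabel, score };
}

// One stateless POST per prediction: the input text travels in the request body.
async function predictSentiment(
  fetchImpl: FetchLike,
  baseUrl: string,
  text: string,
): Promise<SentimentResult> {
  const res = await fetchImpl(`${baseUrl}/v1/sentiment`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ text }),
  });
  if (!res.ok) {
    throw new Error(`Prediction failed with HTTP ${res.status}`);
  }
  return parseSentiment(await res.json());
}
```

In the browser you might call `predictSentiment((url, init) => fetch(url, init), baseUrl, text)`. Validating in `parseSentiment` keeps a malformed model response from reaching the UI.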

Benefits:

- Language-agnostic and easy to implement.
- Maps naturally onto CRUD operations.
- Scalable for enterprise-level applications.

Limitations:

- Can be slower for high-frequency interactions due to per-request HTTP overhead.
- The stateless design makes session-based AI interactions harder: conversation context must be resent with each request or stored on the server.

Insight: According to a 2024 survey, over 60% of AI-driven front-end projects rely on REST APIs for model interaction.

2. GraphQL MCP

Overview: GraphQL allows front-end developers to query exactly the data they need from AI models, reducing over-fetching and under-fetching.

Practical Use: In AI dashboards where multiple model endpoints are integrated, GraphQL helps me fetch predictions, explanations, and metadata in a single query.
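
A sketch of that single-query pattern: one POST returns a prediction, its explanation, and model metadata. The schema (the `prediction`, `explanation`, and `modelInfo` fields) is hypothetical and would come from your own GraphQL server; the `{ query, operationName, variables }` request body and the `data`/`errors` response envelope follow standard GraphQL conventions.

```typescript
// Field and argument names are hypothetical; they depend on your server's schema.
const DASHBOARD_QUERY = `
  query Dashboard($inputId: ID!) {
    prediction(inputId: $inputId) { label confidence }
    explanation(inputId: $inputId) { topFeatures { name weight } }
    modelInfo { name version }
  }
`;

interface DashboardData {
  prediction: { label: string; confidence: number };
  explanation: { topFeatures: { name: string; weight: number }[] };
  modelInfo: { name: string; version: string };
}

// GraphQL response envelope: `data`, `errors`, or both.
interface GraphQLResponse<T> {
  data?: T | null;
  errors?: { message: string }[];
}

// JSON body for a POST to the GraphQL endpoint (Content-Type: application/json).
function buildDashboardRequest(inputId: string): string {
  return JSON.stringify({
    query: DASHBOARD_QUERY,
    operationName: "Dashboard",
    variables: { inputId },
  });
}

// GraphQL can return partial data together with errors; this sketch treats any
// error as fatal, though a dashboard could choose to render the partial data.
function unwrap<T>(response: GraphQLResponse<T>): T {
  if (response.errors && response.errors.length > 0) {
    throw new Error(response.errors.map((e) => e.message).join("; "));
  }
  if (response.data === undefined || response.data === null) {
    throw new Error("Response contained neither data nor errors");
  }
  return response.data;
}
```

The three fields resolve in one round trip, which is where the reduction in request count comes from compared with calling three separate endpoints.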

Benefits:

- Optimized data fetching.
- A single endpoint for multiple model interactions.
- A strongly typed schema lets requests be validated up front and improves tooling and error messages.

Limitations:

- Requires upfront schema design.
- Adds overhead for small, simple projects.

Insight: Companies using GraphQL report a 30% reduction in front-end API calls for data-intensive AI apps.

3. WebSocket MCP

Overview: The WebSocket protocol provides a persistent, full-duplex connection between the front end and the AI backend.

Practical Use: I implement WebSocket MCP for real-time AI applications like collaborative text editing, live sentiment analysis, and AI chatbots.
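
A minimal sketch of the client side of a streaming chatbot over WebSocket. The message format (`prompt` frames out; `token`, `done`, and `error` frames back, each carrying a `requestId`) is an assumption, since real backends define their own. The socket is typed through a small hypothetical `SocketLike` interface so the logic can be exercised without a server; in the browser you would pass a `WebSocket` instance and forward its `message` events to `handleMessage`.

```typescript
// Hypothetical server-to-client frames for a streamed model reply.
type ServerFrame =
  | { type: "token"; requestId: string; text: string }
  | { type: "done"; requestId: string }
  | { type: "error"; requestId: string; message: string };

type ChatUpdate =
  | { requestId: string; status: "streaming"; text: string }
  | { requestId: string; status: "done"; text: string }
  | { requestId: string; status: "error"; error: string };

// The only part of the browser WebSocket API this sketch uses.
interface SocketLike {
  send(data: string): void;
}

class StreamingChat {
  private readonly socket: SocketLike;
  private readonly onUpdate: (update: ChatUpdate) => void;
  private readonly replies = new Map<string, string>(); // requestId -> text so far
  private nextId = 0;

  constructor(socket: SocketLike, onUpdate: (update: ChatUpdate) => void) {
    this.socket = socket;
    this.onUpdate = onUpdate;
  }

  // Send a prompt over the already-open socket; the reply streams back as frames.
  ask(prompt: string): string {
    const requestId = `req-${this.nextId++}`;
    this.replies.set(requestId, "");
    this.socket.send(JSON.stringify({ type: "prompt", requestId, prompt }));
    return requestId;
  }

  // Wire to the socket, e.g. socket.onmessage = (e) => chat.handleMessage(e.data)
  handleMessage(raw: string): void {
    // A production client would validate the frame instead of trusting this cast.
    const frame = JSON.parse(raw) as ServerFrame;
    const soFar = this.replies.get(frame.requestId);
    if (soFar === undefined) return; // unknown or already-finished request
    if (frame.type === "token") {
      const text = soFar + frame.text;
      this.replies.set(frame.requestId, text);
      this.onUpdate({ requestId: frame.requestId, status: "streaming", text });
    } else if (frame.type === "done") {
      this.replies.delete(frame.requestId);
      this.onUpdate({ requestId: frame.requestId, status: "done", text: soFar });
    } else {
      this.replies.delete(frame.requestId);
      this.onUpdate({ requestId: frame.requestId, status: "error", error: frame.message });
    }
  }
}
```

Tagging frames with a `requestId` lets one connection carry several concurrent replies, and the UI can re-render on every `streaming` update as tokens arrive.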

Benefits:

- Real-time updates without repeated HTTP requests.
- Low latency.
- Efficient for streaming model outputs.

Limitations:

- Requires server-side management of connections.
- Can be resource-intensive for large user bases.

Insight: AI chatbots using WebSocket MCP have shown a 40% improvement in response latency compared to traditional REST approaches.

4. gRPC MCP

Overview: gRPC is a high-performance RPC framework that runs over HTTP/2 and uses Protocol Buffers for serialization.

Practical Use: I use gRPC for microservices-based AI applications where speed and reliability are critical, such as financial forecasting dashboards.
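
gRPC messages are usually serialized with Protocol Buffers, which is where the compact wire format comes from. A minimal sketch of the bytes behind a tiny request: one protobuf varint field, wrapped in the 5-byte length prefix gRPC places before every message (a 1-byte compression flag and a 4-byte big-endian length). The `ForecastRequest { int32 horizon_days = 1; }` message is hypothetical, and in a real project `protoc`-generated code and your gRPC client library produce these bytes for you.

```typescript
// Encode a non-negative integer as a protobuf base-128 varint:
// 7 bits per byte, least-significant group first, high bit set = "more bytes follow".
function encodeVarint(value: number): number[] {
  if (!Number.isSafeInteger(value) || value < 0) {
    throw new Error("This sketch only handles non-negative safe integers");
  }
  const bytes: number[] = [];
  let v = value;
  while (v > 0x7f) {
    bytes.push((v % 128) | 0x80); // arithmetic, not >>>, to avoid 32-bit truncation
    v = Math.floor(v / 128);
  }
  bytes.push(v);
  return bytes;
}

// A varint field: key = (fieldNumber << 3) | wireType, where wire type 0 is VARINT.
// (proto3 omits fields equal to their default of 0; this sketch always emits them.)
function encodeVarintField(fieldNumber: number, value: number): number[] {
  return encodeVarint((fieldNumber << 3) | 0).concat(encodeVarint(value));
}

// gRPC length-prefixed message: [compressed flag][4-byte big-endian length][payload].
function frameGrpcMessage(payload: Uint8Array): Uint8Array {
  const framed = new Uint8Array(5 + payload.length);
  const view = new DataView(framed.buffer);
  view.setUint8(0, 0); // 0 = payload is not compressed
  view.setUint32(1, payload.length, false); // false = big-endian
  framed.set(payload, 5);
  return framed;
}

// Hypothetical ForecastRequest { horizon_days: 150 } encodes to 3 payload bytes.
const forecastRequest = frameGrpcMessage(new Uint8Array(encodeVarintField(1, 150)));
```

The same request as JSON (`{"horizon_days":150}`) takes 20 bytes, versus 3 bytes of protobuf payload plus the 5-byte frame header.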

Benefits:

- Supports bidirectional streaming.
- Efficient binary serialization with Protocol Buffers.
- Low latency and high throughput.

Limitations:

- Steeper learning curve.
- Less human-readable than JSON-based protocols.
- Browsers cannot call gRPC services directly; web front ends typically use gRPC-Web through a proxy such as Envoy.

Insight: Enterprises leveraging gRPC for AI microservices report a 50% reduction in latency over REST.

5. Server-Sent Events (SSE) MCP

Overview: SSE is ideal for unidirectional communication where the server pushes AI updates to the client continuously.

Practical Use: In my experience, SSE works well for AI dashboards displaying live metrics like prediction confidence scores or real-time analytics.
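
In the browser, `EventSource` parses the stream for you: `new EventSource(url)` plus an `onmessage` handler (or `addEventListener` for named events) is usually all a dashboard needs. `EventSource` only issues GET requests, though, so one workaround for streaming model output from a POST endpoint is to read the response body with `fetch` and parse the `text/event-stream` format yourself. The sketch below handles a subset of that format (the `event`, `data`, and `id` fields plus comment lines, with `\n` or `\r\n` line endings) and ignores `retry`; treat the `SseParser` class as an illustration, not a spec-complete parser.

```typescript
interface SseEvent {
  type: string; // "message" unless an `event:` field names it
  data: string;
  lastEventId: string;
}

// Incremental parser: feed it text chunks as they arrive; it emits complete events.
class SseParser {
  private buffer = "";
  private eventType = "";
  private dataLines: string[] = [];
  private lastEventId = "";
  private readonly onEvent: (event: SseEvent) => void;

  constructor(onEvent: (event: SseEvent) => void) {
    this.onEvent = onEvent;
  }

  feed(chunk: string): void {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last piece may be an incomplete line; keep it for the next chunk.
    this.buffer = lines.pop() ?? "";
    for (const rawLine of lines) {
      this.processLine(rawLine.endsWith("\r") ? rawLine.slice(0, -1) : rawLine);
    }
  }

  private processLine(line: string): void {
    if (line === "") {
      // A blank line ends the event; events without data lines are not dispatched.
      if (this.dataLines.length > 0) {
        this.onEvent({
          type: this.eventType || "message",
          data: this.dataLines.join("\n"),
          lastEventId: this.lastEventId,
        });
      }
      this.eventType = "";
      this.dataLines = [];
      return;
    }
    if (line.startsWith(":")) return; // comment line, often used as a keep-alive
    const colon = line.indexOf(":");
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? "" : line.slice(colon + 1);
    if (value.startsWith(" ")) value = value.slice(1); // one leading space is dropped
    if (field === "event") this.eventType = value;
    else if (field === "data") this.dataLines.push(value);
    else if (field === "id") this.lastEventId = value;
    // Other fields, including `retry`, are ignored in this sketch.
  }
}
```

With `fetch`, you would decode each chunk of `response.body` with a `TextDecoder` using `decode(chunk, { stream: true })` and pass the resulting text to `feed`.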

Benefits:

- Lightweight compared to WebSockets.
- Automatic reconnection after network failures, built into the browser's EventSource API.
- Simple to implement in front-end JavaScript.

Limitations:

- Limited to one-way server-to-client updates.
- Less control over backpressure management.

Insight: SSE-based AI monitoring interfaces can reduce server load by 20% compared to polling.

6. MQTT MCP

Overview: MQTT is a lightweight publish-subscribe protocol, commonly used in IoT and edge AI applications.

Practical Use: For AI-powered front-end applications monitoring IoT devices, I use MQTT to receive prediction updates or anomaly alerts.
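
Browsers cannot open the raw TCP connections MQTT normally runs on, so a web dashboard connects to a broker that supports MQTT over WebSockets, typically through a client library. Whichever library you use, subscriptions work the same way: devices publish to hierarchical topics and the dashboard subscribes with wildcard filters. Below is a minimal sketch of MQTT's topic-filter matching rules: `+` matches exactly one level, `#` matches all remaining levels (including the parent, so `factory/#` matches `factory`), and filters starting with a wildcard do not match `$`-prefixed system topics. The `factory/...` topic names and the `dispatch` helper are hypothetical.

```typescript
// Returns true if an MQTT topic name matches a subscription filter.
function topicMatches(filter: string, topic: string): boolean {
  // Filters that begin with a wildcard must not match $-prefixed system topics.
  if (topic.startsWith("$") && (filter.startsWith("+") || filter.startsWith("#"))) {
    return false;
  }
  const f = filter.split("/");
  const t = topic.split("/");
  for (let i = 0; i < f.length; i++) {
    if (f[i] === "#") {
      // Multi-level wildcard: valid only as the last level; it matches this level
      // and everything below it, including the parent ("a/#" matches "a").
      return i === f.length - 1;
    }
    if (i >= t.length) return false; // filter has more levels than the topic
    if (f[i] !== "+" && f[i] !== t[i]) return false; // "+" matches any single level
  }
  return f.length === t.length;
}

type Handler = (topic: string, payload: string) => void;

// Deliver an incoming message to every handler whose filter matches its topic.
function dispatch(subs: Array<[string, Handler]>, topic: string, payload: string): number {
  let delivered = 0;
  for (const [filter, handler] of subs) {
    if (topicMatches(filter, topic)) {
      handler(topic, payload);
      delivered++;
    }
  }
  return delivered;
}
```

A dashboard could subscribe to `factory/+/anomaly` for alerts from every machine on one line, while a logging panel subscribes to `factory/#` for everything.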

Benefits:

- Efficient in bandwidth-constrained environments.
- Supports many devices and topics.
- Configurable delivery guarantees through QoS levels 0 (at most once), 1 (at least once), and 2 (exactly once).

Limitations:

- Requires setting up an MQTT broker.
- Not ideal for complex query-based interactions.

Insight: MQTT-based AI dashboards in manufacturing scenarios reduced network traffic by 35% compared to traditional HTTP polling.

7. WebRTC MCP

Overview: WebRTC enables real-time peer-to-peer communication, often used for video/audio streaming AI applications.

Practical Use: I’ve integrated WebRTC MCP for AI-driven video conferencing apps with live gesture recognition and background segmentation.
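
WebRTC deliberately leaves signaling to the application: before media flows, peers must exchange SDP offers and answers plus ICE candidates over a channel you provide, often a WebSocket. A minimal sketch of a typed signaling message format and a validator for incoming messages; the message shape, including the `room` field and the `parseSignal` helper, is an assumption and not part of any standard. In the browser, the `sdp` and `candidate` values would come from `RTCPeerConnection`'s `createOffer`/`createAnswer` and its `icecandidate` events.

```typescript
// Hypothetical application-level signaling messages relayed by your own server.
type SignalMessage =
  | { kind: "offer" | "answer"; room: string; sdp: string }
  | {
      kind: "candidate";
      room: string;
      candidate: string;
      sdpMid: string | null;
      sdpMLineIndex: number | null;
    }
  | { kind: "bye"; room: string };

// Validate a raw message before passing anything to RTCPeerConnection.
// Returns null instead of throwing so one malformed message cannot end the session.
function parseSignal(raw: string): SignalMessage | null {
  let msg: unknown;
  try {
    msg = JSON.parse(raw);
  } catch {
    return null; // not JSON at all
  }
  if (typeof msg !== "object" || msg === null) return null;
  const { kind, room, sdp, candidate, sdpMid, sdpMLineIndex } = msg as Record<string, unknown>;
  if (typeof room !== "string") return null;

  if ((kind === "offer" || kind === "answer") && typeof sdp === "string") {
    return { kind: kind === "offer" ? "offer" : "answer", room, sdp };
  }
  if (kind === "candidate" && typeof candidate === "string") {
    return {
      kind: "candidate",
      room,
      candidate,
      sdpMid: typeof sdpMid === "string" ? sdpMid : null,
      sdpMLineIndex: typeof sdpMLineIndex === "number" ? sdpMLineIndex : null,
    };
  }
  if (kind === "bye") return { kind: "bye", room };
  return null; // unknown kind or missing fields
}
```

Keeping signaling in a small, validated message set makes the session state machine (offer, answer, trickle candidates, hang up) easier to reason about than ad hoc JSON.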

Benefits:

- Real-time, low-latency communication.
- Peer-to-peer connections reduce server load.
- Built-in NAT traversal through ICE, using STUN and TURN servers.

Limitations:

- Complex signaling and session management.
- Browser compatibility issues may arise.

Insight: WebRTC-based AI streaming platforms achieve latency under 200ms, essential for interactive applications.

8. JSON-RPC MCP

Overview: JSON-RPC is a lightweight remote procedure call protocol that allows front-end applications to invoke AI model functions directly.

Practical Use: I use JSON-RPC for AI model inference endpoints where the front end needs to request multiple model operations in a single call.
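
A minimal sketch of the batch pattern in JSON-RPC 2.0: several calls go out as one JSON array, and the responses, which the server may return in any order, are matched back by `id`. The method names `model.classify` and `model.embed` and the helpers `buildBatch` and `matchResponses` are hypothetical; the `jsonrpc`, `method`, `params`, `id`, `result`, and `error` members come from the JSON-RPC 2.0 specification.

```typescript
// JSON-RPC 2.0 request and response objects (member names from the spec).
interface RpcRequest {
  jsonrpc: "2.0";
  method: string;
  params?: unknown;
  id: number;
}

type RpcResponse =
  | { jsonrpc: "2.0"; id: number; result: unknown }
  | { jsonrpc: "2.0"; id: number | null; error: { code: number; message: string; data?: unknown } };

type Outcome = { ok: true; value: unknown } | { ok: false; message: string };

// Turn several calls into one batch (a JSON array), assigning sequential ids.
function buildBatch(calls: { method: string; params?: unknown }[], firstId = 1): RpcRequest[] {
  return calls.map((call, i): RpcRequest => ({
    jsonrpc: "2.0",
    method: call.method,
    params: call.params,
    id: firstId + i,
  }));
}

// The server may answer a batch in any order, so match responses back by id.
function matchResponses(requests: RpcRequest[], responses: RpcResponse[]): Outcome[] {
  const byId = new Map<number, RpcResponse>();
  for (const res of responses) {
    // An id of null means the server could not identify the request; skip it here.
    if (res.id !== null) byId.set(res.id, res);
  }
  return requests.map((req): Outcome => {
    const res = byId.get(req.id);
    if (res === undefined) return { ok: false, message: `No response for id ${req.id}` };
    return "error" in res ? { ok: false, message: res.error.message } : { ok: true, value: res.result };
  });
}
```

To send the batch, POST `JSON.stringify(buildBatch(calls))` to your RPC endpoint and pass the parsed response array to `matchResponses`; one failed call then surfaces as its own error without discarding the others.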

Benefits:

- Minimal overhead.
- Simple request-response format.
- Transport-agnostic: works over HTTP, WebSocket, or TCP.

Limitations:

- Lacks built-in authentication; it must be implemented separately.
- Limited support for streaming responses.

Insight: JSON-RPC is preferred in AI model inference scenarios where speed and simplicity matter most.

9. AMQP MCP

Overview: Advanced Message Queuing Protocol (AMQP) supports robust message-oriented communication for AI systems.

Practical Use: I’ve used AMQP in AI applications requiring reliable task queues, like batch model inference or asynchronous prediction jobs.
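
In a web app, the browser usually does not speak AMQP itself: the front end submits a job over HTTP, a backend publishes it to a queue, workers consume it, and the result returns by polling, SSE, or a WebSocket. AMQP's reliability comes from acknowledgments: a delivered message stays with the broker until the consumer acks it, and a rejected message, or one held by a consumer whose connection drops, is requeued. The hypothetical `InMemoryQueue` below is not an AMQP client; it only models those ack and requeue semantics to show why a crashed worker does not lose an inference job.

```typescript
interface Delivery<T> {
  deliveryTag: number;
  body: T;
  redelivered: boolean;
}

// Hypothetical in-memory model of at-least-once delivery with manual acks.
class InMemoryQueue<T> {
  private ready: { body: T; redelivered: boolean }[] = [];
  private unacked = new Map<number, T>(); // deliveryTag -> message body
  private nextTag = 1;

  publish(body: T): void {
    this.ready.push({ body, redelivered: false });
  }

  // Hand the next message to a consumer; it stays unacked until ack or nack.
  receive(): Delivery<T> | undefined {
    const next = this.ready.shift();
    if (next === undefined) return undefined;
    const deliveryTag = this.nextTag++;
    this.unacked.set(deliveryTag, next.body);
    return { deliveryTag, body: next.body, redelivered: next.redelivered };
  }

  // Processing succeeded: the queue can forget the message.
  ack(deliveryTag: number): void {
    this.unacked.delete(deliveryTag);
  }

  // Processing failed: drop the message, or requeue it (at the front, in this model).
  nack(deliveryTag: number, requeue: boolean): void {
    const body = this.unacked.get(deliveryTag);
    if (body === undefined) return;
    this.unacked.delete(deliveryTag);
    if (requeue) this.ready.unshift({ body, redelivered: true });
  }

  // Simulates a consumer's connection dropping: everything it held unacked is requeued.
  consumerLost(): void {
    this.unacked.forEach((body) => {
      this.ready.push({ body, redelivered: true });
    });
    this.unacked.clear();
  }

  get depth(): number {
    return this.ready.length;
  }
}
```

Because delivery is at least once, a worker can see the same job twice, so batch inference handlers are usually written to be idempotent (for example, keyed by a job ID).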

Benefits:

- Ensures reliable message delivery.
- Supports routing and message acknowledgment.
- Works well with distributed AI services.

Limitations:

- Somewhat more complex to set up.
- Not ideal for low-latency front-end needs.

Insight: AMQP-based AI pipelines can scale horizontally with minimal downtime and improve processing reliability by 45%.

10. Custom TCP/UDP MCP

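Overview: For specialized AI applications, teams sometimes build custom protocols directly on TCP or UDP. Browser JavaScript cannot open raw TCP or UDP sockets, so this option applies to native or desktop front ends, or to a backend that relays results to the web page over WebSocket or WebRTC.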

Practical Use: In gaming AI or high-frequency trading dashboards, I have leveraged TCP for guaranteed delivery and UDP for low-latency streaming of AI predictions.
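
With UDP, datagrams can be lost, duplicated, or reordered, so a client streaming predictions typically tags each datagram with a sequence number and discards anything older than what it has already displayed. A minimal sketch for a native client or relay service, assuming a hypothetical 12-byte datagram layout (a big-endian uint32 sequence number followed by a float64 prediction) and ignoring sequence-number wraparound; the `encodePacket`, `decodePacket`, and `LatestPrediction` names are illustrative.

```typescript
interface PredictionPacket {
  seq: number;
  value: number;
}

const PACKET_BYTES = 12; // 4-byte sequence number + 8-byte float64 (hypothetical layout)

function encodePacket(p: PredictionPacket): Uint8Array {
  const bytes = new Uint8Array(PACKET_BYTES);
  const view = new DataView(bytes.buffer);
  view.setUint32(0, p.seq, false); // big-endian, i.e. network byte order
  view.setFloat64(4, p.value, false);
  return bytes;
}

function decodePacket(bytes: Uint8Array): PredictionPacket | null {
  if (bytes.byteLength !== PACKET_BYTES) return null; // truncated or foreign datagram
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  return { seq: view.getUint32(0, false), value: view.getFloat64(4, false) };
}

// "Latest wins": show a prediction only if it is newer than the last one shown.
class LatestPrediction {
  private lastSeq = -1;
  current: number | null = null;

  accept(bytes: Uint8Array): boolean {
    const packet = decodePacket(bytes);
    if (packet === null || packet.seq <= this.lastSeq) return false; // malformed, duplicate, or stale
    this.lastSeq = packet.seq;
    this.current = packet.value;
    return true;
  }
}
```

Dropping stale packets instead of retransmitting them is the trade-off that makes UDP attractive here: for a live prediction, a newer value is worth more than a late old one. Over TCP, ordering and retransmission are handled for you, but you still need your own framing because TCP delivers a byte stream, not messages.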

Benefits:

- Full control over communication logic.
- Low latency for specialized applications.
- Can be optimized for specific AI workloads.

Limitations:

- Requires in-depth networking knowledge.
- Maintenance overhead for large-scale applications.

Insight: Custom MCP implementations are rare but critical in performance-sensitive AI domains, with latency reductions up to 60%.

FAQs

1. What is MCP in AI for front-end development?

MCP (Model Context Protocol) defines the communication rules between front-end interfaces and AI models. It ensures context-aware, efficient, and reliable data exchange for predictions, insights, or real-time AI interactions.

2. Which MCP is best for real-time AI dashboards?

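WebSocket and SSE are ideal for real-time dashboards because of their low latency and continuous streaming. SSE is the simpler choice when updates flow only from server to client; WebSocket fits when the client also needs to send data over the same connection.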

3. Can REST APIs be used for AI-powered front-end apps?

Yes, REST APIs are widely used, especially for CRUD operations and fetching model predictions. They are language-agnostic and scalable but may not be ideal for high-frequency real-time updates.

4. How does GraphQL improve AI model communication?

GraphQL allows front-end developers to query only the required data from AI models, reducing over-fetching and improving efficiency, particularly in complex AI dashboards.

5. Are MQTT and AMQP suitable for web applications?

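Yes, with some caveats. Browsers cannot open the raw TCP connections MQTT and AMQP normally use, so a web app typically reaches MQTT through a broker that supports MQTT over WebSockets, while AMQP usually stays on the backend and results are relayed to the page over WebSockets or SSE. MQTT suits IoT and edge AI scenarios, whereas AMQP suits distributed AI systems that need reliable message delivery and asynchronous processing.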
