Agent-Oriented Coding: A New Horizon in Software Development Workflows

Over thirty years of leading software teams and digital engineering programmes, we have observed a paradigm shift: what we call agent-oriented coding is steadily altering how we conceive, develop, test and maintain software. In this blog we explain what agent-oriented coding means in practice, how it differs from traditional approaches, how it transforms workflows, and how your teams can adopt it effectively. We draw on practical experience, highlight relevant industry statistics, and provide a structured view of the changes ahead.

What is Agent-Oriented Coding?

At its core, agent-oriented coding (sometimes called agent-oriented programming or AOP) shifts the software design focus from passive entities (objects, modules) to autonomous software agents that sense, decide, act, interact and communicate.

A few definitional pointers:

- In object-oriented programming (OOP), the primary unit is the “object”, which encapsulates data and behaviour and responds to method calls. In agent-oriented paradigms, the primary unit is the “agent”: a software entity that has beliefs, goals and capabilities (or intentions), and that can send and receive messages, make decisions, act autonomously and interact with other agents.

- Agents are typically designed to be proactive (they pursue goals rather than waiting for instructions), reactive (they respond to changes in their environment), social (they interact with other agents) and autonomous (to a degree).

- From a workflow standpoint, adopting agent-oriented coding means structuring your architecture, modules and components as a network of collaborating agents, rather than purely as classes or services invoked by other code.
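To make the contrast concrete, here is a minimal Python sketch, assuming a simple in-memory message type. The `Thermostat`, `ThermostatAgent` and `Message` names, the topics and the temperatures are hypothetical illustrations, not part of any agent framework. The object only answers when called, while the agent holds beliefs and a goal, processes its own inbox (reactive), works towards its goal (proactive) and communicates by emitting messages (social).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    sender: str
    topic: str
    payload: dict


class Thermostat:
    """Object-oriented style: passive, acts only when a caller invokes a method."""

    def __init__(self, target: float) -> None:
        self.target = target

    def should_heat(self, temperature: float) -> bool:
        return temperature < self.target


@dataclass
class ThermostatAgent:
    """Agent-oriented style (hypothetical): holds beliefs and a goal, reads its
    own inbox, decides for itself and emits messages for other agents."""

    name: str
    goal_temperature: float
    beliefs: dict = field(default_factory=dict)
    inbox: deque = field(default_factory=deque)

    def perceive(self) -> None:
        # Reactive: update beliefs from every pending message.
        while self.inbox:
            msg = self.inbox.popleft()
            if msg.topic == "temperature":
                self.beliefs["temperature"] = msg.payload["value"]

    def decide(self) -> list:
        # Proactive: choose actions that move the world towards the goal.
        temperature = self.beliefs.get("temperature")
        if temperature is None:
            # No belief yet, so ask a (hypothetical) sensor agent for a reading.
            return [Message(self.name, "request_reading", {})]
        if temperature < self.goal_temperature:
            delta = self.goal_temperature - temperature
            return [Message(self.name, "heater_on", {"delta": delta})]
        return [Message(self.name, "heater_off", {})]

    def step(self) -> list:
        # One sense-decide-act cycle; the returned messages are the agent's actions.
        self.perceive()
        return self.decide()


agent = ThermostatAgent("thermostat", goal_temperature=21.0)
print([m.topic for m in agent.step()])  # ['request_reading']
agent.inbox.append(Message("sensor", "temperature", {"value": 18.5}))
print([m.topic for m in agent.step()])  # ['heater_on']
```

In a real system the returned messages would be handed to a message bus rather than printed; the sketch keeps everything in memory to show only the shape of an agent.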

In modern software engineering, “agent-oriented coding” carries two related but distinct meanings:

- Architecting systems around autonomous agents (for example, multi-agent systems, or microservices that behave like agents).

- Employing coding agents (AI-powered assistants or software agents) that help write, review, refactor and test code, thereby changing developer workflows. These are often labelled “AI agents” or “agentic programming”.

Both aspects profoundly change the workflow. In this blog we address both, with emphasis on the second (how coding workflows change) because that’s where many teams can derive quick value.

Why It Matters: The Business & Engineering Imperative

Before diving into how workflows change, let’s briefly consider why agent-oriented coding matters in today’s software climate.

-

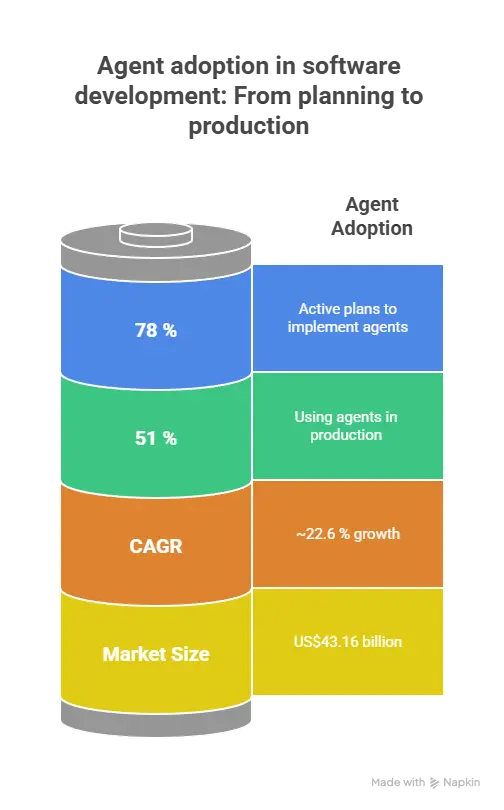

The global custom software development market size was estimated at US$43.16 billion in 2024 and expected to grow at a CAGR of ~22.6 % from 2025-2030. At the same time, development teams face huge pressure: faster time-to-market, higher quality expectations, more complex tech stacks, increasing automation demands. Adopting patterns that boost agility, autonomy and quality are strategic imperatives.

-

Research on coding agents shows: for example, one study of AI agent-driven refactoring found that agents explicitly targeted refactoring in 26.1 % of commits analysed, with measurable improvements in structural metrics (e.g., median reduction in class LOC).

-

Surveys of AI agent adoption in software development show that many teams are planning agentic workflows. For example: “About 51 % of respondents are using agents in production today; 78 % have active plans to implement agents soon.”

From an engineering strategy standpoint, agent-oriented coding offers ways to scale human capability, automate complexity, improve component reuse, reduce cognitive load, and build systems that adapt rather than remain static.

How Agent-Oriented Coding Changes Software Development Workflows

From requirements through release and maintenance, here’s how workflows evolve when you adopt agent-oriented architectures and coding agents.

1. Requirements & Planning Phase

- Agents (or tooling built on an agent-oriented architecture) can ingest user stories, documentation and feedback, and automatically derive structured tasks or goals. For example, an intelligent assistant can parse stakeholder documents to suggest backlog items.

- The team mindset shifts: instead of simply “build this feature”, you define agents (roles) and their goals (“agent A must respond to event X”, “agent B must coordinate between subsystems”).

- This framing drives earlier alignment: you think in terms of autonomous components, service roles, inter-agent messaging, monitoring and lifecycle control rather than simply classes and functions.
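Role and goal statements like these can be captured as data early on, so gaps are visible before any agent is built. The sketch below is a hypothetical planning aid in Python, assuming each agent role lists the events it is responsible for; `AgentRole`, `unowned_events` and `overlapping_owners` are illustrative names, and the order-processing events are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRole:
    """Planning-time description of one agent: its name, the goal it pursues
    and the events it is responsible for handling."""
    name: str
    goal: str
    handles: frozenset


def unowned_events(event_catalogue: set, roles: list) -> set:
    """Events in the requirements that no agent has been assigned to handle."""
    covered = set().union(*(role.handles for role in roles))
    return event_catalogue - covered


def overlapping_owners(roles: list) -> dict:
    """Events claimed by more than one agent, worth resolving before design."""
    owners = {}
    for role in roles:
        for event in sorted(role.handles):
            owners.setdefault(event, []).append(role.name)
    return {event: names for event, names in owners.items() if len(names) > 1}


roles = [
    AgentRole("order_intake", "Accept only valid orders", frozenset({"order_received"})),
    AgentRole("coordinator", "Keep stock and billing consistent",
              frozenset({"order_accepted", "payment_failed"})),
    AgentRole("billing", "Charge customers for accepted orders", frozenset({"order_accepted"})),
]
events = {"order_received", "order_accepted", "payment_failed", "order_cancelled"}
print(unowned_events(events, roles))   # {'order_cancelled'}
print(overlapping_owners(roles))       # {'order_accepted': ['coordinator', 'billing']}
```

An overlap is not necessarily wrong (several agents may legitimately react to one event), but making it explicit forces the team to decide who owns the outcome.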

2. Architecture & Design

- The design focus moves to agent roles, capabilities and interaction protocols. You ask: which agents will exist, what information will they hold (beliefs), what goals will they pursue, and how will they interact with other agents (messaging) and with their environment? This framing derives from the BDI (Belief-Desire-Intention) model of agent programming.

- The architecture becomes more modular and loosely coupled: each agent is an encapsulated unit with well-defined interfaces (messages/events), which makes agents easier to deploy, scale and replace.

- You may choose frameworks or middleware suited to agent-oriented systems (e.g., multi-agent system frameworks, message brokers, reactive event buses). Design thinking shifts towards workflows, sequences, negotiations among agents and emergent behaviour rather than just method calls.
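A toy version of the BDI cycle may help. This Python sketch assumes plans are pre-written recipes keyed by goal, each with a context condition over the agent's beliefs; all class names, goals and actions are hypothetical, and dedicated BDI platforms implement deliberation and plan selection in far richer ways. Each `step` revises beliefs, commits to at most one new intention and executes one action of the current intention.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Plan:
    goal: str                          # the desire this plan can achieve
    context: Callable[[dict], bool]    # applicability test over current beliefs
    actions: list                      # recipe of action names


@dataclass
class Intention:
    goal: str
    remaining: list                    # actions still to execute


@dataclass
class BDIAgent:
    beliefs: dict
    desires: list                      # goals, highest priority first
    plans: list
    intentions: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def deliberate(self) -> None:
        # Commit to the first desire that is not yet intended and has an applicable plan.
        intended = {i.goal for i in self.intentions}
        for goal in self.desires:
            if goal in intended:
                continue
            for plan in self.plans:
                if plan.goal == goal and plan.context(self.beliefs):
                    self.intentions.append(Intention(goal, list(plan.actions)))
                    return

    def execute(self) -> None:
        # Perform one action of the oldest intention; drop the goal once its plan completes.
        if not self.intentions:
            return
        current = self.intentions[0]
        if current.remaining:
            action = current.remaining.pop(0)
            self.log.append(f"{current.goal}: {action}")
        if not current.remaining:
            self.intentions.pop(0)
            self.desires.remove(current.goal)

    def step(self, percept: dict) -> None:
        self.beliefs.update(percept)   # belief revision
        self.deliberate()              # desires -> intentions
        self.execute()                 # intentions -> actions


plans = [
    Plan("restock", lambda b: b["stock"] < 5, ["reserve_supplier_slot", "place_order"]),
    Plan("report", lambda b: True, ["send_daily_report"]),
]
agent = BDIAgent(beliefs={"stock": 10}, desires=["restock", "report"], plans=plans)
for percept in ({"stock": 3}, {}, {}):
    agent.step(percept)
print(agent.log)
# ['restock: reserve_supplier_slot', 'restock: place_order', 'report: send_daily_report']
```

Copying `plan.actions` into each `Intention` keeps the plan library reusable across agents and cycles.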

3. Coding & Implementation

- Developer workflows change: you now code agents with their state (beliefs), goals, event handlers, decision logic and message interfaces, rather than plain service classes.

- In parallel, coding agents (AI assistants) increasingly come into play: they help scaffold agent code, generate message handlers, review communication protocols and suggest patterns based on prior agent systems. That reduces boilerplate and frees developers to focus on higher-level intent.

- Because agent-oriented systems embrace messaging and asynchronous behaviour, teams adopt event-driven models more often, integrate message brokers or actor-based frameworks, and simulate agent interactions in test harnesses (rather than relying on simple function calls).

- Code reviews shift: you verify agent autonomy, interactions and goal-achieving behaviour, not just method logic. You inspect messaging sequences and emergent behaviours, and ensure agents don’t get stuck or deadlocked.
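The asynchronous, message-driven style (and the review concern about agents getting stuck) can be sketched with Python's standard `asyncio` queues. The two agents and their message formats are hypothetical; the point is that each agent owns an inbox, reacts to messages, and bounds every wait with `asyncio.wait_for`, so a missing reply is recorded rather than blocking forever.

```python
import asyncio


async def pricing_agent(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Hypothetical agent: answers quote requests until told to stop."""
    while True:
        msg = await inbox.get()
        if msg["type"] == "stop":
            break
        if msg["type"] == "quote_request":
            await outbox.put({"type": "quote", "sku": msg["sku"], "price": 9.99})


async def buyer_agent(to_pricing: asyncio.Queue, from_pricing: asyncio.Queue,
                      skus: list, timeout: float = 1.0) -> dict:
    """Hypothetical agent: requests a quote per SKU but never waits forever;
    a reply that misses the timeout is recorded as None instead of blocking."""
    quotes = {}
    for sku in skus:
        await to_pricing.put({"type": "quote_request", "sku": sku})
        try:
            reply = await asyncio.wait_for(from_pricing.get(), timeout)
            quotes[reply["sku"]] = reply["price"]
        except asyncio.TimeoutError:
            quotes[sku] = None
    await to_pricing.put({"type": "stop"})
    return quotes


async def main() -> dict:
    requests, replies = asyncio.Queue(), asyncio.Queue()
    pricing = asyncio.create_task(pricing_agent(requests, replies))
    quotes = await buyer_agent(requests, replies, ["A1", "B2"])
    await pricing  # the pricing agent exits after the "stop" message
    return quotes


print(asyncio.run(main()))  # {'A1': 9.99, 'B2': 9.99}
```

With a real broker the queues would be replaced by topics or mailboxes, but the review questions stay the same: who waits on whom, for how long, and what happens when a message never arrives.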

4. Testing & QA

- Agent-oriented systems require agent-oriented testing: verifying that agents behave appropriately given inputs and events, that interactions among agents yield the expected emergent system behaviour, that agents’ goals are achieved, and that internal states update as expected.

- Testing workflows therefore involve test harnesses that send messages or events to one or more agents, simulate environments and assert outcome states. QA must consider emergent interactions and coordination among agents, not just isolated unit tests.

- Coding agents can automate parts of test generation, e.g., creating test scenarios for agent interactions, generating event sequences and scaffolding assertions, which speeds up test coverage of complex agent flows.
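A minimal agent-oriented test harness, assuming two hypothetical agents (`InventoryAgent` and `OrderAgent`) that exchange dict-based events, might look like the Python sketch below. Instead of testing one method, it generates every ordering of a small event set with `itertools.permutations` and asserts system-level invariants across the whole interaction: stock never goes negative, every order is resolved, and stock is conserved.

```python
from itertools import permutations


class InventoryAgent:
    """Hypothetical agent: believes it holds `stock` units and never over-reserves."""

    def __init__(self, stock: int) -> None:
        self.stock = stock

    def handle(self, event: dict) -> dict:
        if event["type"] == "reserve":
            if event["qty"] <= self.stock:
                self.stock -= event["qty"]
                return {"type": "reserved", "order": event["order"]}
            return {"type": "rejected", "order": event["order"]}
        if event["type"] == "restock":
            self.stock += event["qty"]
        return {"type": "ack"}


class OrderAgent:
    """Hypothetical agent: records the outcome of every order it hears about."""

    def __init__(self) -> None:
        self.status = {}

    def handle(self, event: dict) -> None:
        if event["type"] in ("reserved", "rejected"):
            self.status[event["order"]] = event["type"]


def run_scenario(events, initial_stock: int):
    """Harness: feed events to the inventory agent and forward its replies
    to the order agent, returning both agents for inspection."""
    inventory, orders = InventoryAgent(initial_stock), OrderAgent()
    for event in events:
        orders.handle(inventory.handle(event))
    return inventory, orders


def check_all_orderings(events: list, initial_stock: int) -> int:
    """Replay every ordering of the events and assert system-level invariants.
    Returns the number of scenarios checked."""
    reserve_ids = {e["order"] for e in events if e["type"] == "reserve"}
    restocked = sum(e["qty"] for e in events if e["type"] == "restock")
    checked = 0
    for sequence in permutations(events):
        inventory, orders = run_scenario(sequence, initial_stock)
        assert inventory.stock >= 0, f"negative stock after {sequence}"
        assert set(orders.status) == reserve_ids, f"unresolved order after {sequence}"
        reserved = sum(e["qty"] for e in events
                       if e["type"] == "reserve" and orders.status[e["order"]] == "reserved")
        assert inventory.stock == initial_stock + restocked - reserved, "stock not conserved"
        checked += 1
    return checked


events = [
    {"type": "reserve", "order": "o1", "qty": 3},
    {"type": "reserve", "order": "o2", "qty": 4},
    {"type": "restock", "qty": 2},
]
print(check_all_orderings(events, initial_stock=5))  # 6
```

Whether `o2` is accepted depends on whether the restock arrives first, which is exactly the kind of ordering-dependent outcome a single hand-written unit test tends to miss; the invariants must hold either way. Exhaustive permutations only scale to small event sets, so larger suites would sample orderings instead.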

5. Deployment, Monitoring & Maintenance

- Because agents are autonomous and act on their goals and beliefs, monitoring focuses on agent health, message queues, goal-fulfilment metrics, latency in inter-agent communication and emergent system behaviour, rather than purely on server health or service response time.

- Maintenance workflows adjust: you may update agent goals or messaging protocols rather than method implementations. The system becomes more adaptable: thanks to loose coupling, you can deploy new agent types or retire agents with minimal impact.

- Coding agents assist with refactoring agent interactions, generating monitoring dashboards, simulating agent loads, and identifying and replacing obsolete agents, thereby reducing maintenance overhead and increasing agility.
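As an illustration of agent-level monitoring, the Python sketch below computes alerts per agent from queue depth, goal completion rate and 95th-percentile inter-agent message latency. The `AgentTelemetry` fields and the alert thresholds are hypothetical; in practice these numbers would come from your message broker and tracing stack, and the thresholds from your own service-level objectives.

```python
import math
from dataclasses import dataclass, field

# Hypothetical alert thresholds; real values belong in your own SLOs.
MAX_QUEUE_DEPTH = 100
MIN_COMPLETION_RATE = 0.9
MAX_P95_LATENCY_MS = 250.0


@dataclass
class AgentTelemetry:
    name: str
    queue_depth: int = 0          # messages waiting in the agent's inbox
    goals_started: int = 0
    goals_completed: int = 0
    latencies_ms: list = field(default_factory=list)  # inter-agent message latency samples

    def completion_rate(self) -> float:
        return self.goals_completed / self.goals_started if self.goals_started else 1.0

    def p95_latency_ms(self) -> float:
        # Nearest-rank 95th percentile; 0.0 when there are no samples.
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        return ordered[math.ceil(0.95 * len(ordered)) - 1]


def health_alerts(agents: list) -> list:
    """Return human-readable alerts for agents breaching any threshold."""
    alerts = []
    for agent in agents:
        if agent.queue_depth > MAX_QUEUE_DEPTH:
            alerts.append(f"{agent.name}: backlog of {agent.queue_depth} messages")
        if agent.completion_rate() < MIN_COMPLETION_RATE:
            alerts.append(f"{agent.name}: only {agent.completion_rate():.0%} of goals completed")
        if agent.p95_latency_ms() > MAX_P95_LATENCY_MS:
            alerts.append(f"{agent.name}: p95 latency {agent.p95_latency_ms():.0f} ms")
    return alerts


router = AgentTelemetry("router", queue_depth=340, goals_started=50, goals_completed=41,
                        latencies_ms=[40.0, 60.0, 310.0, 420.0])
sender = AgentTelemetry("sender", queue_depth=3, goals_started=40, goals_completed=40,
                        latencies_ms=[15.0, 30.0])
for alert in health_alerts([router, sender]):
    print(alert)
# router: backlog of 340 messages
# router: only 82% of goals completed
# router: p95 latency 420 ms
```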

6. Team & Workflow Culture

- Teams shift from “developer writes code → tester verifies → deployment” to a more collaborative, agent-centred mindset in which roles such as “agent designer”, “agent choreographer” and “agent behaviour tester” emerge.

- With coding agents assisting, developers can offload routine tasks (e.g., message skeletons, common agent patterns, test stubs) and focus on business logic, system goals and emergent behaviour.

- Workflow tools and CI/CD pipelines evolve: you integrate agent-deployment steps, agent-interaction simulations in CI, message-queue health checks and agent metrics dashboards. Coding agents can help generate these pipeline configurations.

Practical Perspective: Our Experience & Key Lessons

Having overseen multiple large-scale engineering programmes, we have seen agent-oriented coding workflows deliver measurable improvements, but only when adopted carefully. Here are some practical lessons:

- Start small: We recommend piloting one subsystem as an agent network rather than rewriting the entire application. For example, we piloted a notification subsystem built as a set of agents that detect events, decide routing and send messages, which allowed safe experimentation.

- Define clear agent roles and interactions: Without explicit definitions of each agent’s goal, belief state and interaction protocol, the system tends to devolve into a mess of asynchronous services. Investing time in modelling agent behaviour up front pays off.

- Leverage coding agents for boilerplate and scaffolding: We used AI coding assistants to generate agent classes, message definitions, test stubs and monitoring dashboards. This freed senior engineers to focus on smart behaviours rather than repetitive code. We observed cycle-time reductions of roughly 30-40% on agent code delivery in those pilots, which is consistent with industry reports of 30-50% reductions in routine tasks when using AI agents.

- Instrument and monitor up front: Since agents act autonomously and may interact in unexpected ways, building dashboards, logs, message tracing and goal-fulfilment metrics is critical. We only detected bugs in emergent behaviour once we could see message backlogs, stalled goals or bottlenecks in agent networks.

- Governance and human oversight: While agents increase autonomy, we enforced guardrails: human-in-the-loop review of agent interactions during the first phases, monitoring of message volumes, and rollback mechanisms in case behaviours deviated. Without these, unexpected emergent behaviours caused production issues.

- Training and mindset shift: Developers needed upskilling to think in terms of agents, message flows, autonomy and belief state rather than purely classes and methods. Investing in training and one-on-one coaching was essential.

- Measure outcomes: We tracked metrics such as time to implement a feature, lines of hand-written code saved, number of agent-refactor cycles and quality (bugs found post-release). In one case, code-review time fell by about 25%, and agent-based modules had 15% fewer defects in their first release than modules built on the legacy model.
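To illustrate a notification pilot of this kind and the value of message tracing, here is a minimal in-process sketch in Python. It assumes a single-threaded bus rather than a real broker; `Bus`, the three agent classes, the event fields and the user preferences are all hypothetical. The bus records every delivery in `trace` and caps the number of processing steps, a crude guard against runaway message loops.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Envelope:
    sender: str
    recipient: str
    body: dict


class Bus:
    """Single-threaded in-process bus that records every delivery in `trace`."""

    def __init__(self, max_steps: int = 1000) -> None:
        self.queue = deque()
        self.agents = {}
        self.trace = []
        self.max_steps = max_steps  # crude guard against runaway message loops

    def register(self, agent) -> None:
        self.agents[agent.name] = agent
        agent.bus = self

    def send(self, sender: str, recipient: str, body: dict) -> None:
        self.queue.append(Envelope(sender, recipient, body))

    def run(self) -> None:
        steps = 0
        while self.queue and steps < self.max_steps:
            env = self.queue.popleft()
            self.trace.append(f"{env.sender} -> {env.recipient}: {env.body['kind']}")
            self.agents[env.recipient].receive(env)
            steps += 1


class DetectorAgent:
    """Detects notifiable events and drops debug noise."""
    name = "detector"

    def receive(self, env: Envelope) -> None:
        if env.body["severity"] != "debug":
            self.bus.send(self.name, "router", {**env.body, "kind": "notify"})


class RouterAgent:
    """Decides the channel, using its beliefs about user preferences."""
    name = "router"

    def __init__(self, preferences: dict) -> None:
        self.preferences = preferences

    def receive(self, env: Envelope) -> None:
        body = env.body
        if body["severity"] == "critical":
            channel = "sms"
        else:
            channel = self.preferences.get(body["user"], "email")
        self.bus.send(self.name, "sender", {**body, "kind": "deliver", "channel": channel})


class SenderAgent:
    """Delivers messages (here it just records them)."""
    name = "sender"

    def __init__(self) -> None:
        self.delivered = []

    def receive(self, env: Envelope) -> None:
        self.delivered.append((env.body["user"], env.body["channel"], env.body["text"]))


bus = Bus()
sender = SenderAgent()
for agent in (DetectorAgent(), RouterAgent({"ana": "push"}), sender):
    bus.register(agent)
bus.send("system", "detector", {"kind": "event", "user": "ana", "text": "Disk 90% full", "severity": "warning"})
bus.send("system", "detector", {"kind": "event", "user": "ben", "text": "DB down", "severity": "critical"})
bus.send("system", "detector", {"kind": "event", "user": "ana", "text": "cache miss", "severity": "debug"})
bus.run()
print(sender.delivered)  # [('ana', 'push', 'Disk 90% full'), ('ben', 'sms', 'DB down')]
print(len(bus.trace))    # 7
```

Because every hop lands in `bus.trace`, a stalled or looping flow shows up as a missing or repeated line rather than as silence, which is the kind of visibility the lessons above argue for.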

Benefits & Challenges

Benefits

- Improved modularity and adaptability: Because agents encapsulate behaviour, beliefs, goals and messaging protocols, it’s easier to add or modify capabilities without large ripple effects.

- Better alignment with real-world business processes: Many business workflows naturally map to “actors” (agents) interacting with one another; mapping software agents onto these roles is intuitive and improves maintainability.

- Increased reuse and scalability: Agents can be deployed, scaled and replaced more dynamically than monolithic services.

- Enhanced developer productivity: With coding agents taking over scaffolding, routine tasks and refactoring of agent interactions, human developers can focus on high-value logic.

- Support for emergent behaviour: In complex domains (e.g., supply chain, logistics, distributed systems), agents can coordinate, negotiate and adapt dynamically, which traditional code approximates less elegantly.

Challenges

- Complexity of agent interactions: With autonomy comes unpredictability. Emergent behaviour may surprise you, so design, monitoring and testing must be rigorous.

- Tooling and maturity: Agent-oriented frameworks, debugging tools and agent-testing infrastructure are less mature than mainstream OOP and service frameworks.

- Skill-set shift: Teams need to learn agent design, message protocols and autonomy management, which involves a learning curve.

- Testing complexity: As noted earlier, testing multi-agent systems is more complex: you must validate inter-agent flows and emergent states, not just individual methods.

- Governance and oversight: Autonomous agents may take unexpected paths. Without guardrails, logs and oversight, you risk drift, unanticipated behaviours or non-compliance.

When and How to Adopt Agent-Oriented Coding

Here’s a practical phased approach I recommend:

- Identify a candidate subsystem: Choose a domain with loosely coupled roles, dynamic interactions, or a natural mapping to agents (e.g., a notification system, event-processing engine or autonomous workflow engine).

- Define agent roles and interactions: Run workshops with stakeholders to map out the agents, their beliefs (data they hold), goals (outcomes they pursue), capabilities (actions they can take) and inter-agent message flows.

- Select tooling and scaffold: Decide on a framework (an agent-oriented library), messaging infrastructure and monitoring/telemetry. Use a coding agent (AI tool) to scaffold agent classes, message definitions and test harnesses.

- Build the pilot and test: Develop the subsystem, instrument telemetry, test agent interactions and validate emergent behaviours in a non-production environment.

- Measure and refine: Track metrics (feature development time, defect rate, maintenance overhead). Compare against a traditional service-based implementation as a control.

- Scale and integrate: Expand to other subsystems, train teams, refine templates and best practices, and integrate into CI/CD pipelines.

- Govern and maintain: Establish guardrails and alerting for agent drift, monitor message queues, backlogs and agent health metrics, and review agent behaviours periodically.
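Two common guardrails, a message-volume circuit breaker and a human-in-the-loop approval gate, can be sketched in a few lines of Python. `MessageRateGuard`, `guarded_action` and the action names are hypothetical; the injectable clock is there so the behaviour can be tested deterministically, and the breaker deliberately stays open until a person calls `reset()`.

```python
import time
from collections import deque
from typing import Callable


class MessageRateGuard:
    """Circuit breaker: trips once an agent tries to send more than
    `max_messages` within `window_s` seconds, and stays open until a
    person calls reset(), so a runaway agent is paused, not just slowed."""

    def __init__(self, max_messages: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_messages = max_messages
        self.window_s = window_s
        self.clock = clock
        self.sent = deque()   # timestamps of recently allowed messages
        self.tripped = False

    def allow(self) -> bool:
        now = self.clock()
        while self.sent and now - self.sent[0] > self.window_s:
            self.sent.popleft()          # forget messages outside the window
        if self.tripped or len(self.sent) >= self.max_messages:
            self.tripped = True
            return False
        self.sent.append(now)
        return True

    def reset(self) -> None:
        self.tripped = False
        self.sent.clear()


def guarded_action(action: str, high_impact: set, approve: Callable[[str], bool]) -> str:
    """Human-in-the-loop gate: high-impact actions need explicit approval."""
    if action in high_impact and not approve(action):
        return f"blocked: {action}"
    return f"executed: {action}"


def reviewer_says_no(action: str) -> bool:
    return False


now = [0.0]  # fake clock so the example is deterministic
guard = MessageRateGuard(max_messages=3, window_s=10.0, clock=lambda: now[0])
print([guard.allow() for _ in range(4)])  # [True, True, True, False]
now[0] = 60.0
print(guard.allow())                      # False (stays tripped until reviewed)
guard.reset()
print(guard.allow())                      # True

print(guarded_action("refund_customer", {"refund_customer"}, reviewer_says_no))  # blocked: refund_customer
print(guarded_action("send_digest", {"refund_customer"}, reviewer_says_no))      # executed: send_digest
```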

Impact on Software Development Workflow: Summary Table

| Phase | Traditional Workflow | Agent-Oriented Workflow |
|---|---|---|
| Requirements & Planning | Feature → tasks | Identify agents, goals, message-flows |
| Architecture & Design | Services/modules, class diagrams | Agents, roles, protocols, message schemas |
| Coding | Classes, methods, service endpoints | Agent classes (beliefs, goals, actions), message handlers |
| Testing | Unit tests, integration tests | Agent-oriented testing: simulate events, inter-agent flows, emergent behaviour |
| Deployment & Monitoring | Service metrics, endpoint health | Agent health, message queue depth, goal completion rates |
| Maintenance | Refactor classes/methods | Re-assign or retire agents, update message protocols, tune agent goals |
| Team Culture | Developer/tester roles | Agent-designer, agent-choreographer, human-agent collaboration |

Frequently Asked Questions (FAQs)

1. What is the difference between agent-oriented programming and object-oriented programming?

Agent-oriented programming (AOP) centres on agents that have beliefs, goals and capabilities, communicate via messages and act autonomously. Object-oriented programming (OOP) centres on objects (state + methods) that are invoked by other parts of the code. In AOP the primary building block is more “active”: it initiates interactions rather than just responding to calls.

2. Which software development workflows benefit most from agent-oriented coding?

Workflows involving distributed, asynchronous, autonomous roles (e.g., event-processing systems, microservices with dynamic behaviour, IoT networks, multi-actor simulations) benefit strongly. Routine, repetition-heavy coding work (e.g., test generation, code reviews) also benefits via coding agents.

3. How soon can a team expect productivity gains when adopting agent-oriented coding?

Based on my practical experience and industry data, teams can expect cycle-time reductions of roughly 20-40% in modules that are well suited to agent modelling. For comparison, development teams using AI agents report productivity gains of 30-50% on routine coding tasks.

4. What are the biggest risks or pitfalls when shifting to agent-oriented coding?

Key risks include: emergent undesired behaviour due to agent interactions, immature tooling for multi-agent debugging, steep learning curve for teams, lack of monitoring/guardrails leading to drift in agent goals, under-estimating testing complexity in multi-agent systems.

5. Are coding-agents (AI agents) part of agent-oriented coding? How do they fit in?

Yes, coding-agents are highly relevant. They act as autonomous assistants to the developer: scaffolding code, generating test scenarios, refactoring agent interactions, monitoring message flows. Their adoption changes developer workflows: less routine coding, more oversight and design thinking. They complement the agent-oriented architecture rather than replacing developer insight.
