In our thirty years of experience helping technology-driven companies bring advanced software products to market, we have repeatedly seen that launching an AI-driven application is both highly promising and fraught with unique risks. In this post we share a structured, pragmatic guide to designing, building and launching such applications, highlighting best practices and common pitfalls, so that you deliver real user value, scale sustainably and sidestep preventable failure modes. We also include some industry statistics that underline both the urgency and the opportunity of getting it right.

2. Best practices for launching an AI-driven application

Below are the key practices we recommend, born of many engagements across product development, data science, UX and operations.

2.1 Define clear user value and use case-fit

Before building sophisticated models, define the user problem clearly: what are the users trying to do, what pain point are you solving, and how will AI make it measurably better?

Avoid the trap of “let’s add AI because it’s trendy”. Focus instead on the change the application brings, e.g., “reduce manual classification from X hours to X/10” or “increase user retention by Y % via personalised recommendations”.

By grounding the product in real user value, you can structure metrics around impact, not just model accuracy.
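One way to keep metrics anchored to impact is to record each KPI with its pre-launch baseline and always report the change against it. The sketch below is illustrative only: the `ImpactKpi` class is hypothetical, and the numbers in the usage lines are made up to mirror the “X hours to X/10” and retention examples above.

```python
from dataclasses import dataclass


@dataclass
class ImpactKpi:
    """A business KPI tracked against its pre-launch baseline (hypothetical helper)."""
    name: str
    baseline: float
    current: float
    higher_is_better: bool = True

    def relative_change(self) -> float:
        """Signed change relative to the baseline, e.g. -0.9 means a 90 % reduction."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline must be non-zero")
        return (self.current - self.baseline) / self.baseline

    def improved(self) -> bool:
        """True if the KPI moved in the desired direction."""
        delta = self.current - self.baseline
        return delta > 0 if self.higher_is_better else delta < 0


# Illustrative figures only: manual effort cut from 40 h to 4 h, retention from 30 % to 33 %.
manual_hours = ImpactKpi("manual classification hours/week", 40.0, 4.0, higher_is_better=False)
retention = ImpactKpi("30-day retention", 0.30, 0.33)

for kpi in (manual_hours, retention):
    print(f"{kpi.name}: {kpi.relative_change():+.0%} (improved={kpi.improved()})")
```

The `higher_is_better` flag matters because cost- and effort-style KPIs improve when they go down, while adoption- and retention-style KPIs improve when they go up.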

2.2 Data strategy, quality and governance

The old adage holds: “garbage in, garbage out”. For AI-driven apps you must ensure:

- access to relevant data (internal or external) that actually supports your use case
- data cleansing, labelling, annotation and versioning workflows
- governance, privacy, security and ethics frameworks (especially if user data / PII is involved)

We recommend investing up front in data pipelines and instrumentation. Without them you risk undetected model drift, poor performance in production and regulatory exposure.
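Data-quality checks are easiest to enforce when they run automatically on every batch before it reaches training. The following is a minimal standard-library sketch; the record layout, the field names (`id`, `text`, `label`) and the `data_quality_report` function are hypothetical, and a dedicated validation library or your pipeline's own checks may fit better in practice.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List, Set


def data_quality_report(
    records: Iterable[Dict[str, Any]],
    required_fields: List[str],
    allowed_labels: Set[str],
    id_field: str = "id",
    label_field: str = "label",
) -> Dict[str, Any]:
    """Summarise common data problems in a batch of labelled records (hypothetical helper)."""
    rows = list(records)
    missing: Counter = Counter()    # field name -> rows where it is absent or empty
    id_counts: Counter = Counter()  # id -> number of occurrences
    invalid_labels = 0

    for row in rows:
        for field_name in required_fields:
            if row.get(field_name) in (None, ""):
                missing[field_name] += 1
        if row.get(label_field) not in allowed_labels:
            invalid_labels += 1
        id_counts[row.get(id_field)] += 1

    # Every occurrence of an id beyond the first counts as a duplicate.
    duplicate_ids = sum(count - 1 for count in id_counts.values() if count > 1)
    return {
        "rows": len(rows),
        "missing": dict(missing),
        "invalid_labels": invalid_labels,
        "duplicate_ids": duplicate_ids,
    }


batch = [
    {"id": 1, "text": "refund please", "label": "billing"},
    {"id": 2, "text": "", "label": "support"},
    {"id": 2, "text": "app crashes", "label": "bug?"},
]
report = data_quality_report(batch, ["id", "text"], {"billing", "support"})
print(report)
```

A pipeline could refuse to promote a batch when, say, duplicate ids appear or the share of invalid labels exceeds an agreed threshold; those thresholds are a team decision, not something this sketch prescribes.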

2.3 Model–product integration and UX design

AI isn’t a black box you simply drop in. You must integrate model outputs in ways that make sense to the user:

- Use transparent feedback loops: show results, let users correct them, and feed those corrections back into improvement.
- Manage expectations: AI may produce suggestions, not perfect answers. Set the right tone in the UX.
- Monitor latency, reliability and error cases: if model responses take too long, the user experience suffers.
- Ensure “fail gracefully” behaviour: missing data, out-of-distribution inputs and low model confidence must all be handled without breaking the experience.
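“Fail gracefully” can be made concrete with a wrapper around the model call that returns a safe default when the input is missing, the call errors or times out, or confidence is too low. This is a sketch under assumptions: `model_fn` is any callable returning a `(label, confidence)` pair, and the `needs_review` label, the 0.7 confidence floor and the 0.5 s timeout are placeholder values, not recommendations.

```python
import concurrent.futures
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple

# Shared pool so the caller can stop waiting on a slow call after its timeout.
_EXECUTOR = concurrent.futures.ThreadPoolExecutor(max_workers=4)


@dataclass
class Prediction:
    label: str
    confidence: float
    source: str  # "model" or "fallback", useful for UX copy and for monitoring


def predict_with_fallback(
    model_fn: Callable[[Dict[str, Any]], Tuple[str, float]],
    features: Dict[str, Any],
    fallback_label: str = "needs_review",  # hypothetical safe default, e.g. route to a human
    min_confidence: float = 0.7,           # placeholder threshold; tune per use case
    timeout_s: float = 0.5,                # placeholder latency budget
) -> Prediction:
    """Call the model, but return a safe fallback instead of a bad or late answer."""
    if not features:  # missing input: do not call the model at all
        return Prediction(fallback_label, 0.0, "fallback")

    future = _EXECUTOR.submit(model_fn, features)
    try:
        label, confidence = future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The worker thread keeps running; we just stop waiting for it.
        return Prediction(fallback_label, 0.0, "fallback")
    except Exception:
        # Any model error (bad input, crash on unexpected data, ...) degrades to the fallback.
        return Prediction(fallback_label, 0.0, "fallback")

    if confidence < min_confidence:
        # Low confidence: keep the score so the UI can show it, but do not act on the label.
        return Prediction(fallback_label, confidence, "fallback")
    return Prediction(label, confidence, "model")


def toy_model(features: Dict[str, Any]) -> Tuple[str, float]:
    """Stand-in model: confident only when the text mentions an invoice."""
    text = str(features.get("text", ""))
    return ("billing", 0.92) if "invoice" in text else ("other", 0.40)


def slow_model(features: Dict[str, Any]) -> Tuple[str, float]:
    """Stand-in for a model call that blows its latency budget."""
    time.sleep(0.5)
    return ("billing", 0.99)


confident = predict_with_fallback(toy_model, {"text": "invoice overdue"})
unsure = predict_with_fallback(toy_model, {"text": "hello"})
late = predict_with_fallback(slow_model, {"text": "invoice"}, timeout_s=0.1)
print(confident, unsure, late, sep="\n")
```

Tagging each result with `source` lets the UI phrase fallbacks differently (e.g. “we couldn’t classify this automatically”) and lets monitoring track how often the fallback path fires.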

2.4 Infrastructure, scalability and monitoring

In many launches the model works in testing but fails at scale. We emphasise:

- Build scalable infrastructure from launch (cloud compute, autoscaling, load balancing) rather than deferring scaling until later.
- Instrument metrics for usage, data drift, model performance, user feedback and errors.
- Include logging, alerts and analytics so you can monitor in production, not just in development.
- Plan for retraining, versioning and rollback: models degrade or become stale, so you must support iteration.
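Rollback is only cheap if every deployed model is versioned and the previous version stays loadable. The in-memory `ModelRegistry` below is a hypothetical sketch of that bookkeeping; real deployments usually delegate it to a model registry service or artifact store, which this example does not model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Model = Callable[[Any], Any]


@dataclass
class ModelRegistry:
    """Tracks registered model versions and which one serves traffic (hypothetical)."""
    versions: Dict[str, Model] = field(default_factory=dict)
    promotions: List[str] = field(default_factory=list)  # promotion history, newest last

    def register(self, version: str, model: Model) -> None:
        if version in self.versions:
            raise ValueError(f"version {version!r} already registered; versions are immutable")
        self.versions[version] = model

    def promote(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown version {version!r}")
        self.promotions.append(version)

    @property
    def active(self) -> str:
        if not self.promotions:
            raise LookupError("no version has been promoted yet")
        return self.promotions[-1]

    def rollback(self) -> str:
        """Revert to the previously promoted version and return its name."""
        if len(self.promotions) < 2:
            raise LookupError("no earlier version to roll back to")
        self.promotions.pop()
        return self.active

    def predict(self, x: Any) -> Any:
        return self.versions[self.active](x)


registry = ModelRegistry()
registry.register("v1", lambda x: x * 2)
registry.register("v2", lambda x: x * 3)  # imagine this is the retrained model
registry.promote("v1")
registry.promote("v2")
print(registry.active, registry.predict(10))
rolled_back_to = registry.rollback()  # e.g. triggered by a monitoring alert
print(rolled_back_to, registry.predict(10))
```

Keeping registered versions immutable means a rollback always restores exactly the model that was previously validated, rather than a silently modified copy.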

2.5 Cross-functional alignment and change management

Launching an AI app isn’t just a data science exercise—it requires alignment between product, engineering, UX, legal/compliance, operations and business stakeholders.

We recommend governance rituals: regular stand-ups, KPIs tied to business outcomes, user training where needed, and change management to support user adoption. Training in particular is often neglected: around 50 % of companies using AI report little or no training for staff.

A lack of training or alignment amplifies the risk that users abandon the product.

2.6 Pilot, iterate, then scale

Rather than a full-scale launch, start with a pilot or MVP that tests your core hypotheses (user value, model accuracy, scalability). Use data from the pilot to refine the product, then scale once those hypotheses are validated.

This incremental approach reduces risk and optimises resource allocation.
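To decide whether a pilot has validated its hypothesis, compare it against a control group rather than eyeballing the numbers. A minimal sketch, assuming a binary outcome such as conversion and independent pilot and control users, is a two-proportion z-test using only the standard library; the counts in the example are made up.

```python
import math
from typing import Dict


def two_proportion_z_test(conv_control: int, n_control: int,
                          conv_pilot: int, n_pilot: int) -> Dict[str, float]:
    """Two-sided z-test for a difference in conversion rates between control and pilot."""
    p_control = conv_control / n_control
    p_pilot = conv_pilot / n_pilot
    pooled = (conv_control + conv_pilot) / (n_control + n_pilot)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_pilot))
    if se == 0:
        raise ValueError("both groups are all-0 or all-1; the test is undefined")
    z = (p_pilot - p_control) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability of a standard normal
    return {
        "control_rate": p_control,
        "pilot_rate": p_pilot,
        "relative_lift": (p_pilot - p_control) / p_control if p_control > 0 else float("nan"),
        "z": z,
        "p_value": p_value,
    }


# Illustrative numbers: 100/1000 conversions without the AI feature, 130/1000 with it.
result = two_proportion_z_test(100, 1000, 130, 1000)
print({k: round(v, 4) for k, v in result.items()})
```

The normal approximation assumes reasonably large samples; for small pilots, or when many metrics are tested at once, agree on the test and the significance threshold before the pilot starts.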

2.7 Ethical, legal and user-trust considerations

AI brings specific ethical and regulatory concerns: bias, fairness, transparency, data privacy, explainability. Unaddressed, these can derail user trust and brand reputation.

Ensure you:

- identify and mitigate bias in training data and models
- include transparency for users (e.g., “This recommendation was generated by AI”)
- comply with data protection laws (GDPR, CCPA, regional equivalents)
- monitor adversarial risk, model misuse and security vulnerabilities.
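A first, coarse bias check is to compare how often the model produces a favourable outcome for each group. The sketch below computes per-group selection rates, the gap between the highest and lowest rate (often called the demographic parity difference) and their ratio; the group labels and predictions are hypothetical, and passing this single check does not by itself establish that a model is fair.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions per group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_summary(rates: Dict[Hashable, float]) -> Dict[str, float]:
    """Gap and ratio between the most- and least-favoured groups."""
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "parity_difference": highest - lowest,
        "parity_ratio": lowest / highest if highest > 0 else 1.0,
    }


preds = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4
rates = selection_rates(preds, groups)
print(rates, parity_summary(rates))
```

The ratio is sometimes compared with the “four-fifths rule” heuristic from US employment-selection guidance (flag when it falls below 0.8), but which metric and threshold are appropriate depends on the use case and jurisdiction.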

2.8 Post-launch feedback loop and continuous improvement

Launching is not the end—it’s the beginning of the lifecycle. You must:

- collect user metrics (engagement, retention, ROI)
- monitor for model drift and data distribution changes
- update the product roadmap based on usage insights
- engage users for feedback and improvement
- iterate design and model together (product ↔ data science synergy).
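Drift monitoring often starts by comparing the distribution of a feature (or of model scores) in production against a reference window from training. One common statistic for this is the Population Stability Index (PSI). The sketch below uses equal-width bins over the reference range and a small epsilon to avoid log(0); the bin count and example data are assumptions, and the frequently quoted alert levels (around 0.1 for “watch”, 0.25 for “act”) are rules of thumb rather than standards.

```python
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], lo: float, width: float,
                bins: int, eps: float) -> List[float]:
    """Fraction of values in each equal-width bin; out-of-range values go to the edge bins."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        counts[min(max(idx, 0), bins - 1)] += 1
    return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum((cur - ref) * ln(cur / ref)) over bins defined on the reference data."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature
    ref = _bin_shares(reference, lo, width, bins, eps)
    cur = _bin_shares(current, lo, width, bins, eps)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [i / 100 for i in range(100)]           # training-time feature values
stable = [i / 100 + 0.0015 for i in range(100)]     # production, nearly unchanged
shifted = [0.5 + i / 200 for i in range(100)]       # production, shifted upwards
print(round(population_stability_index(reference, stable), 4))
print(round(population_stability_index(reference, shifted), 4))
```

Running a check like this per feature on a schedule, and alerting when the index crosses the agreed level, turns “monitor for model drift” into something a team can act on.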

3. Common pitfalls when launching an AI-driven application

Despite best intentions, many launches falter. Here are frequent pitfalls we have seen.

3.1 Over-engineering the model too early

Developing overly complex models before validating user value is a common trap. If you haven’t clearly defined benefit and business metrics, you may waste time on features that don’t matter.

Why this fails: high model cost + low adoption = poor ROI.

3.2 Ignoring data readiness or neglecting fallbacks

If data is incomplete, unclean or biased, model outcomes will suffer. Moreover, without a fallback plan for “when the model fails”, users are left frustrated.

Why this fails: negative user experience → abandonment.

3.3 Poor UX for AI outputs

Model output without clear user interface design often confuses users. If suggestions are unclear, lack context, or there’s no transparency, users may mistrust the app.

Why this fails: low adoption, low retention.

3.4 Scaling oversight

Seeing success in a pilot but failing at production scale (performance degradation, cost explosion, data pipeline issues) is common.

Why this fails: high operational cost, slow performance, user drop-off.

3.5 Neglecting cross-functional ownership

If product, marketing, engineering, data science and operations are siloed, the launch often lacks coherence: accountability is unclear and KPIs are misaligned.

Why this fails: conflicting priorities, launch delays, insufficient resources.

3.6 Ignoring ethical and regulatory risks

Launching without assessing bias, fairness, data protection, model explainability or adversarial risks invites regulatory scrutiny and loss of user trust.

Why this fails: brand damage, legal cost, user abandonment.

3.7 Insufficient monitoring and feedback loop

After launch, complacency is dangerous. Without ongoing monitoring of model drift, data changes or user behaviour shifts, performance degrades.

Why this fails: degraded accuracy, rising errors, negative ROI.

4. Practical launch checklist (for your next project)

Here is a practical checklist we use in our engagements when launching an AI-driven application:

- ✅ Define target use case & user value
- ✅ Select key performance indicators (KPIs): user adoption, conversion lift, retention, cost reduction
- ✅ Confirm data readiness: sources, quality, labelling, pipelines in place
- ✅ Build a prototype/MVP integrating model + UX
- ✅ Conduct a pilot: monitor model accuracy + user feedback + system performance
- ✅ Establish production infrastructure: monitoring, logging, autoscaling, versioning
- ✅ Establish governance: cross-functional team, ethics review, regulatory compliance
- ✅ Develop a training and adoption plan for users (internal/external)
- ✅ Launch the MVP to a broader user base and track KPIs
- ✅ Monitor continuously: model drift, data change, user behaviour; feed insights back into the roadmap
- ✅ Plan for iteration and scaling: refine, extend features, scale performance, support growth.

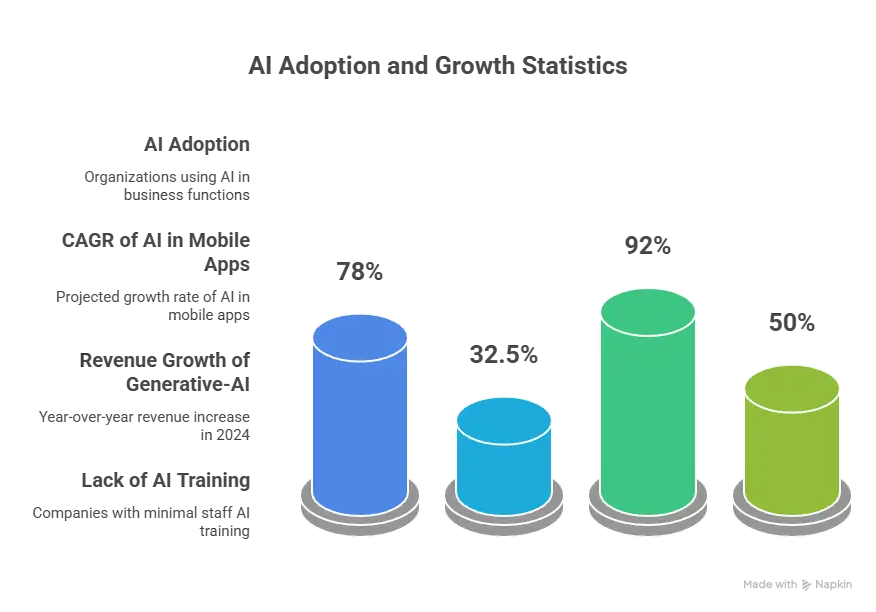

5. Some statistics to keep in mind

- 78 % of organisations report using AI in at least one business function.
- The global “AI in mobile apps” market is expected to reach ~$354 billion by 2034 (from ~$21 billion in 2024) at ~32.5 % CAGR.
- Generative-AI mobile apps achieved ~US$1.3 billion in revenue in 2024 (+92 % year-over-year) and nearly 1.5 billion downloads.
- Around 50 % of companies using AI report little or no training for staff.

These figures illustrate both the substantial opportunity and the risk of under-preparation.
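As a quick sanity check on the market projection above, the compound annual growth rate implied by growing from ~$21 billion in 2024 to ~$354 billion in 2034 (10 years) is:

$$
\text{CAGR} = \left(\frac{V_{2034}}{V_{2024}}\right)^{1/10} - 1 = \left(\frac{354}{21}\right)^{1/10} - 1 \approx 16.86^{0.1} - 1 \approx 0.326
$$

That is about 32.6 % per year, consistent with the quoted ~32.5 % once rounding of the endpoint figures is taken into account.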

FAQs

1. What qualifies as an AI-driven application?

An AI-driven application is one where machine learning or artificial intelligence techniques (e.g., predictive modelling, natural language processing, computer vision, recommendation engines) are core to delivering value to the user—not just a decorative add-on. The AI must actively contribute to decision logic or adaptation rather than simply being a standard rule-based feature.

2. How do I measure the success of an AI application?

Measure both model metrics (accuracy, precision/recall, latency, drift) and business/user metrics (user adoption, retention, conversion lift, cost savings, error reduction). For example, if your AI app is reducing manual processing time, measure the reduction in hours and cost. Without business-centric KPIs, you may optimise the wrong things.
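On the model side, precision and recall for a binary classifier come straight from the confusion counts. Here is a minimal standard-library sketch; the labels are illustrative, and libraries such as scikit-learn provide equivalent, more complete functions.

```python
from typing import Dict, Sequence


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary precision, recall and F1, treating 1 as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged items, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of true items, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


metrics = precision_recall_f1([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(metrics)
```

Reporting these next to the business KPI (for example hours saved per week) makes it visible when a model improvement fails to move the outcome that actually matters.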

3. What are the biggest risks when launching AI applications?

Key risks include data quality issues, model bias, lack of user trust, poor UX integration, scalability failures, regulatory non-compliance, model drift and lack of monitoring, misalignment between product and business goals, and launching without a valid use case. Being aware of these risks is the first step to mitigating them.

4. How should I plan for infrastructure and scaling of an AI application?

Plan for production-grade infrastructure from the start: scalable compute, autoscaling of model inference, monitoring/alerts, data pipelines for training and feedback, versioning, rollback capability. Perform load tests, define limits, monitor latency and cost. Scaling after launch often costs far more than designing for scale upfront.
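When load testing, latency targets are usually stated as percentiles (p95, p99) rather than averages, because a small share of slow requests is what users notice. The sketch below applies the nearest-rank percentile method to a list of measured latencies; the 300 ms p95 budget and the sample data are hypothetical.

```python
import math
from typing import Any, Dict, Sequence


def percentile(samples: Sequence[float], q: float) -> float:
    """Nearest-rank percentile: smallest value with at least q % of samples at or below it."""
    if not samples or not 0 < q <= 100:
        raise ValueError("need at least one sample and 0 < q <= 100")
    ordered = sorted(samples)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def check_latency_budget(latencies_ms: Sequence[float], p95_budget_ms: float) -> Dict[str, Any]:
    """Summarise a load-test run and flag whether p95 stays within budget."""
    summary: Dict[str, Any] = {f"p{q}": percentile(latencies_ms, q) for q in (50, 95, 99)}
    summary["within_budget"] = summary["p95"] <= p95_budget_ms
    return summary


# Hypothetical run: most requests are fast, a few hit a slow path.
latencies = [120.0] * 90 + [250.0] * 6 + [900.0] * 4
summary = check_latency_budget(latencies, p95_budget_ms=300.0)
print(summary)
```

In this made-up run the p95 passes the budget while the p99 exposes a 900 ms tail; an average (159 ms here) would hide both, which is why tail percentiles are worth tracking in production as well as in load tests.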

5. What ethical or compliance issues should I consider when launching AI applications?

Consider data privacy (consent, anonymisation), algorithmic bias and fairness, transparency and explainability for users, adversarial misuse and security, region-specific regulation (e.g., GDPR, CCPA), and user trust (clearly indicate when AI is used). Neglecting these issues can result in regulatory penalties, brand damage or user rejection.
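For the anonymisation point, a common building block is keyed pseudonymisation: replacing direct identifiers with an HMAC so that records can still be joined without exposing the raw value. This is a sketch under assumptions: the field names and the hard-coded key are placeholders (in practice the key would come from a secrets manager), and pseudonymised data generally still counts as personal data under GDPR, because whoever holds the key can re-link it.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable


def pseudonymise(value: str, key: bytes) -> str:
    """Deterministic keyed hash (HMAC-SHA256) of a normalised identifier."""
    # Normalising case and whitespace is an assumption that suits e-mail-style identifiers.
    normalised = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalised, hashlib.sha256).hexdigest()


def pseudonymise_record(record: Dict[str, Any], pii_fields: Iterable[str],
                        key: bytes) -> Dict[str, Any]:
    """Return a copy of the record with the given PII fields replaced by pseudonyms."""
    cleaned = dict(record)
    for field_name in pii_fields:
        if cleaned.get(field_name) is not None:
            cleaned[field_name] = pseudonymise(str(cleaned[field_name]), key)
    return cleaned


KEY = b"replace-with-a-secret-from-your-secrets-manager"  # hypothetical placeholder key
event = {"email": "Alice@Example.com ", "plan": "pro", "clicks": 7}
safe_event = pseudonymise_record(event, ["email"], KEY)
print(safe_event)
```

Using a keyed HMAC rather than a plain hash matters: unkeyed hashes of e-mail addresses or phone numbers can often be reversed by hashing candidate values and comparing.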
